M3ED: MULTI-ROBOT, MULTI-SENSOR, MULTI-ENVIRONMENT EVENT DATASET

UNIVERSITY OF PENNSYLVANIA

Kenneth Chaney, Fernando Cladera, Ziyun Wang, Anthony Bisulco, M. Ani Hsieh, Christopher Korpela, Vijay Kumar, Camillo J. Taylor, Kostas Daniilidis

ABSTRACT

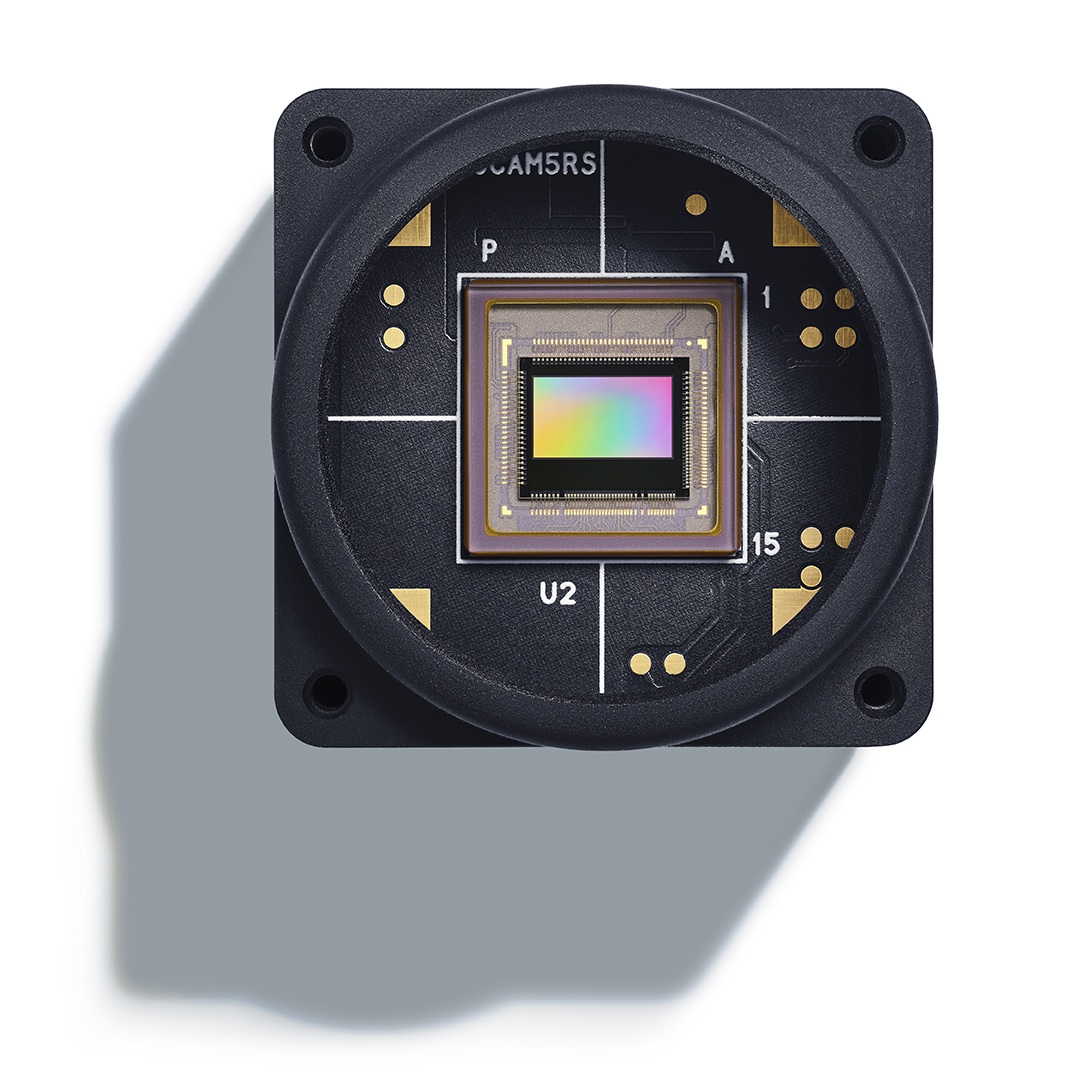

We present M3ED, the first multi-sensor event camera dataset focused on high-speed dynamic motions in robotics applications. M3ED provides high-quality, synchronized, and labeled data from multiple platforms, including ground vehicles, legged robots, and aerial robots, operating in challenging conditions such as driving along off-road trails, navigating through dense forests, and executing aggressive flight maneuvers. Our dataset also covers demanding operational scenarios for event cameras, such as scenes with high egomotion and multiple independently moving objects. The sensor suite used to collect M3ED includes high-resolution stereo event cameras (1280×720), grayscale imagers, an RGB imager, a high-quality IMU, a 64-beam LiDAR, and RTK localization. This dataset aims to accelerate the development of event-based algorithms and methods for edge cases encountered by autonomous systems in dynamic environments.