LIVE DEMONSTRATION: INTEGRATING EVENT-BASED HAND TRACKING INTO TOUCHFREE INTERACTIONS

ULTRALEAP

Ryan Page

ABSTRACT

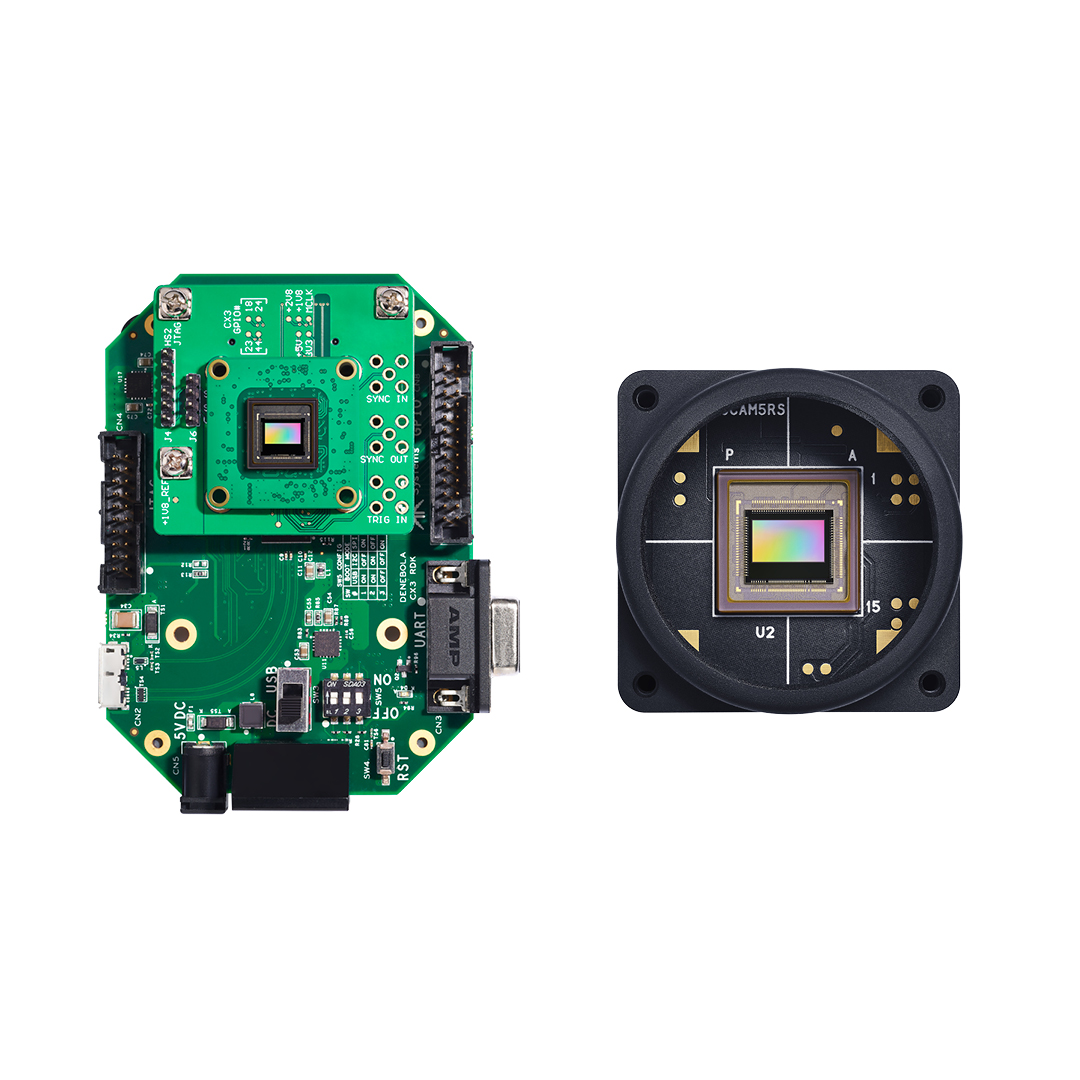

Hand tracking is becoming increasingly prominent as an intuitive way to interact with digital content. There are, however, technical challenges at the sensing level, including environmental robustness, power consumption, and system latency. Event cameras have the potential to address these challenges through their asynchronous readout, low power consumption, and high dynamic range. To explore this potential, Ultraleap have developed a prototype stereo camera using two Prophesee IMX636ES sensors. To go from event data to hand positions, the event data is aggregated into event frames. These frames are then consumed by a hand tracking model, which outputs 28 joint positions for each hand with respect to the camera. This data is used by Ultraleap’s TouchFree application to determine a user’s interaction with a 2D display. These components are brought together in the Ball Pit demo shown in the supplementary material, in which the user removes balls from a box using their hands.
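The abstract does not say how events are aggregated into event frames. As an illustration only, the sketch below assumes one common scheme: summing signed event polarities per pixel over a fixed time window. The event tuple layout, the function name `events_to_frame`, the window bounds, and the tiny resolution are all hypothetical and are not taken from Ultraleap's pipeline.

```python
from typing import Iterable, List, Tuple

# Hypothetical event layout: (x, y, timestamp_us, polarity), polarity in {0, 1}.
Event = Tuple[int, int, int, int]


def events_to_frame(
    events: Iterable[Event],
    width: int,
    height: int,
    t_start: int,
    t_end: int,
) -> List[List[int]]:
    """Accumulate events with t_start <= t < t_end into a signed count image.

    Assumption: ON events (polarity 1) add +1 and OFF events (polarity 0)
    add -1 at their pixel. Other encodings (e.g. separate channels per
    polarity, or time surfaces) are equally plausible.
    """
    frame = [[0] * width for _ in range(height)]
    for x, y, t, polarity in events:
        in_window = t_start <= t < t_end
        in_bounds = 0 <= x < width and 0 <= y < height
        if in_window and in_bounds:
            frame[y][x] += 1 if polarity else -1
    return frame


# Toy usage: four events on a 3x2 sensor, aggregated over a 1 ms window.
events = [(1, 0, 100, 1), (1, 0, 250, 1), (2, 1, 900, 0), (0, 0, 1500, 1)]
frame = events_to_frame(events, width=3, height=2, t_start=0, t_end=1000)
```

In this toy example the last event falls outside the window and is ignored. A frame of this kind is a dense image that a conventional frame-based model can consume, at the cost of discarding the fine-grained timing within each window.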