MONOCULAR EVENT-BASED VISION FOR OBSTACLE AVOIDANCE WITH A QUADROTOR

UNIVERSITY OF PENNSYLVANIA, UNIVERSITY OF ZURICH

Anish Bhattacharya, Marco Cannici, Nishanth Rao, Yuezhan Tao, Vijay Kumar, Nikolai Matni, Davide Scaramuzza

ABSTRACT

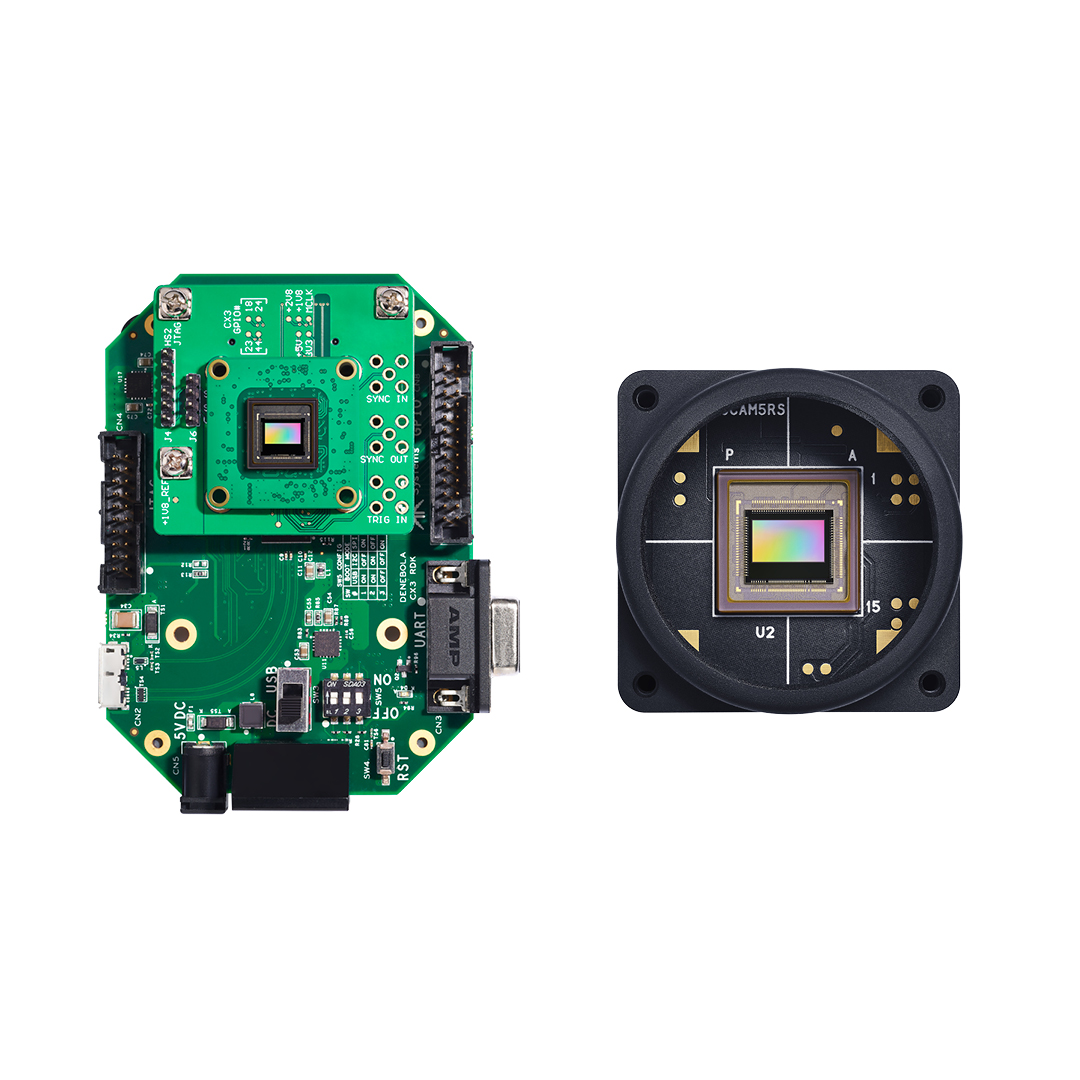

We present the first events-only static-obstacle avoidance method for a quadrotor equipped with only an onboard monocular event camera. Quadrotors are capable of fast, agile flight in cluttered environments when piloted manually, but vision-based autonomous flight in unknown environments remains difficult, in part because of the sensor limitations of onboard cameras. Event cameras promise nearly zero motion blur and high dynamic range, but they produce a very large volume of events under significant ego-motion and lack a continuous-time sensor model in simulation, which prevents direct sim-to-real transfer. By using depth prediction as an intermediate step in our learning framework, we pre-train a reactive events-to-control obstacle avoidance policy in simulation and then fine-tune its perception component on limited real-world events-depth data to achieve dodging in indoor and outdoor settings. We demonstrate this on two quadrotor and event-camera platforms in multiple settings and find, perhaps counter-intuitively, that low speeds (1 m/s) make dodging harder and more prone to collisions, while high speeds (5 m/s) result in better depth estimation and dodging. We also find that success rates in outdoor scenes can be significantly higher than in certain indoor scenes.