EVENT-BASED MOTION-ROBUST ACCURATE SHAPE ESTIMATION FOR MIXED REFLECTANCE SCENES

RICE UNIVERSITY, NORTHWESTERN UNIVERSITY, UNIVERSITY OF ARIZONA

Aniket Dashpute, Jiazhang Wang, James Taylor, Oliver Cossairt, Ashok Veeraraghavan, Florian Willomitzer

ABSTRACT

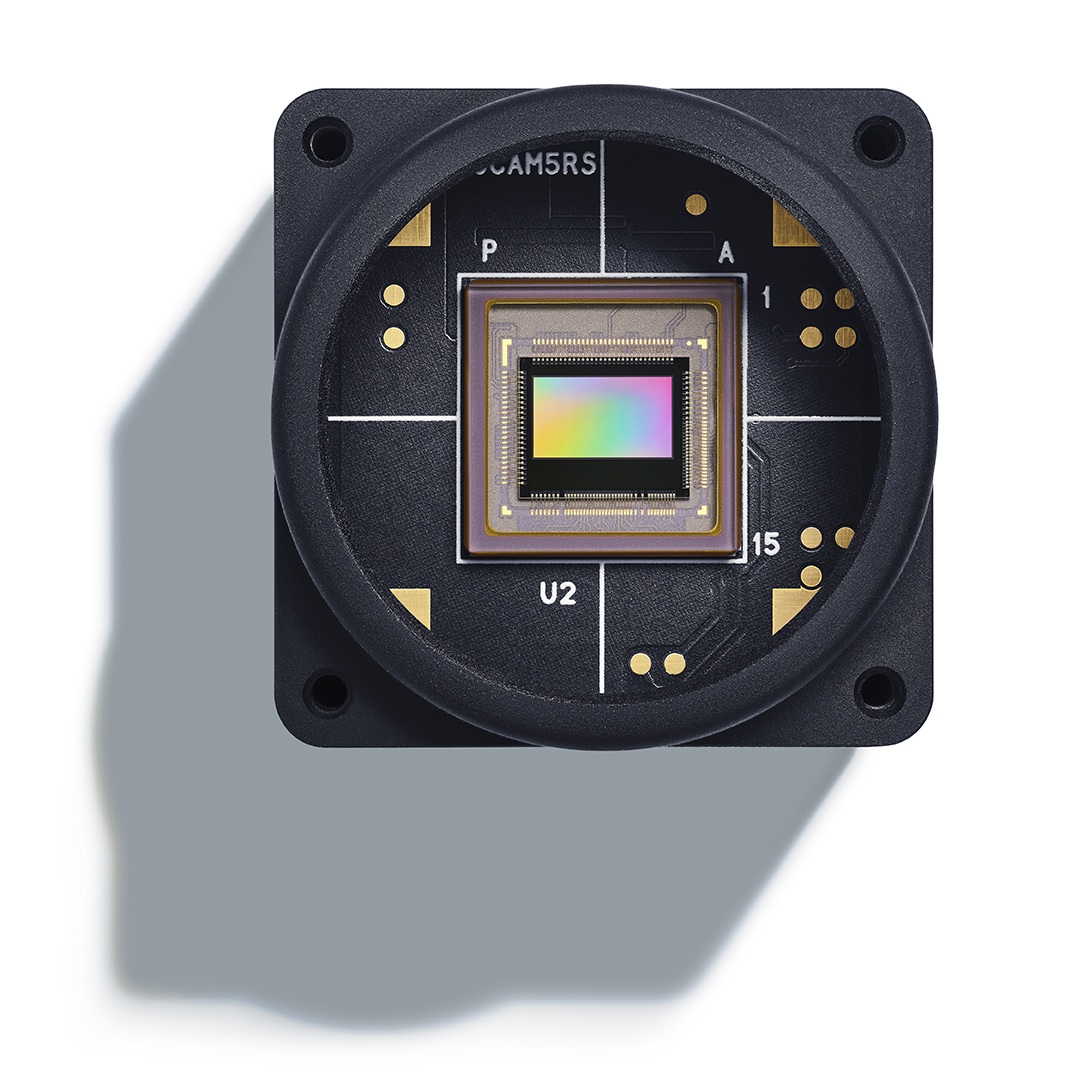

Event-based structured light systems have recently been introduced as an exciting alternative to conventional frame-based triangulation systems for the 3D measurement of diffuse surfaces. Important benefits include the fast capture speed and the high dynamic range provided by the event camera, albeit at the cost of lower data quality. So far, both low-accuracy event-based and high-accuracy frame-based 3D imaging systems have been tailored to a specific surface type, such as diffuse or specular, and cannot be used for a broader class of object surfaces (“mixed reflectance scenes”). In this paper, we present a novel event-based structured light system that enables fast 3D imaging of mixed reflectance scenes with high accuracy. On the captured events, we use epipolar constraints that intrinsically enable decomposing the measured reflections into diffuse, two-bounce specular, and other multi-bounce reflections. The diffuse objects in the scene are reconstructed using triangulation. Finally, the reconstructed diffuse scene parts are used as a “display” to evaluate the specular scene parts via deflectometry. This novel procedure allows us to use the entire scene as a virtual screen, using only a scanning laser and an event camera. The resulting system achieves fast and motion-robust (14 Hz) reconstructions of mixed reflectance scenes with < 500 μm accuracy. Moreover, we introduce a “superfast” capture mode (250 Hz) for the 3D measurement of diffuse scenes.
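As an illustration of the epipolar constraint mentioned above, the following minimal sketch splits camera events into those lying on the epipolar line of the current laser spot (candidates for direct, diffuse reflection) and those lying off it (candidates for multi-bounce light). It is not the decomposition used in this paper: the two-way split, the rectified fundamental matrix `F_RECTIFIED`, the tolerance `tol_px`, and all function names are assumptions chosen for illustration only.

```python
import math

def epipolar_line(F, x_proj):
    """Return the homogeneous line l = F @ x in the camera image
    for a projector/laser point x_proj = (u, v)."""
    x = (x_proj[0], x_proj[1], 1.0)
    return tuple(sum(F[r][c] * x[c] for c in range(3)) for r in range(3))

def epipolar_distance(F, x_proj, x_cam):
    """Perpendicular pixel distance of camera point x_cam from the
    epipolar line induced by x_proj."""
    a, b, c = epipolar_line(F, x_proj)
    return abs(a * x_cam[0] + b * x_cam[1] + c) / math.hypot(a, b)

def split_events(F, x_proj, events, tol_px=1.5):
    """Split event pixel locations into on-line and off-line sets.
    tol_px is a hypothetical tolerance, not a value from the paper."""
    on_line, off_line = [], []
    for ev in events:
        if epipolar_distance(F, x_proj, ev) <= tol_px:
            on_line.append(ev)
        else:
            off_line.append(ev)
    return on_line, off_line

# Assumed fundamental matrix of an ideal rectified pair with a horizontal
# baseline: epipolar lines are image rows, so the distance is |v_cam - v_proj|.
F_RECTIFIED = [[0.0, 0.0, 0.0],
               [0.0, 0.0, -1.0],
               [0.0, 1.0, 0.0]]

laser_spot = (120.0, 80.0)  # hypothetical laser position in projector coordinates
events = [(200.0, 80.4), (310.0, 95.0), (150.0, 79.0)]  # hypothetical event pixels

on_line, off_line = split_events(F_RECTIFIED, laser_spot, events)
print("on epipolar line:", on_line)
print("off epipolar line:", off_line)
```

In a real system the fundamental matrix would come from calibrating the laser scanner against the event camera, and the tolerance would depend on calibration error and event noise.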