EVIMO2: AN EVENT CAMERA DATASET FOR MOTION SEGMENTATION, OPTICAL FLOW, STRUCTURE FROM MOTION, AND VISUAL INERTIAL ODOMETRY IN INDOOR SCENES WITH MONOCULAR OR STEREO ALGORITHMS

UNIVERSITY OF MARYLAND

Levi Burner, Anton Mitrokhin, Cornelia Fermüller, Yiannis Aloimonos

ABSTRACT

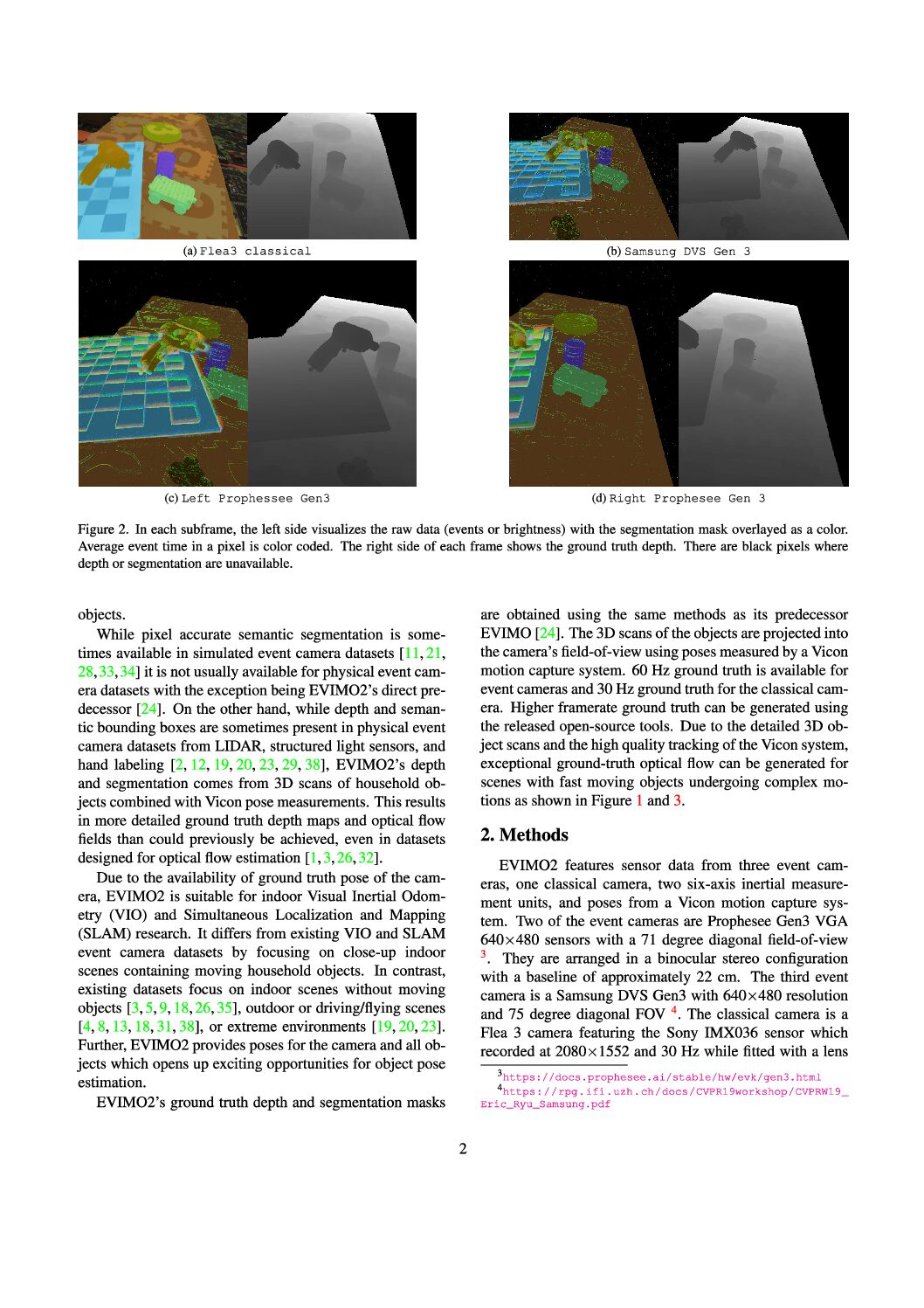

A new event camera dataset, EVIMO2, is introduced that improves on the popular EVIMO dataset by providing more data, from better cameras, in more complex scenarios. As with its predecessor, EVIMO2 provides labels in the form of per-pixel ground truth depth and segmentation as well as camera and object poses. All sequences use data from physical cameras and many sequences feature multiple independently moving objects. Typically, such labeled data is unavailable in physical event camera datasets. Thus, EVIMO2 will serve as a challenging benchmark for existing algorithms and a rich training set for the development of new algorithms. In particular, EVIMO2 is suited for supporting research in motion and object segmentation, optical flow, structure from motion, and visual (inertial) odometry in either monocular or stereo configurations. EVIMO2 consists of 41 minutes of data from three 640