COMPLEMENTING EVENT STREAMS AND RGB FRAMES FOR HAND MESH RECONSTRUCTION

PEKING UNIVERSITY, CHINESE ACADEMY OF SCIENCES, UNIVERSITY OF CHINESE ACADEMY OF SCIENCES

Jianping Jiang, Xinyu Zhou, Bingxuan Wang, Xiaoming Deng, Chao Xu, Boxin Shi

ABSTRACT

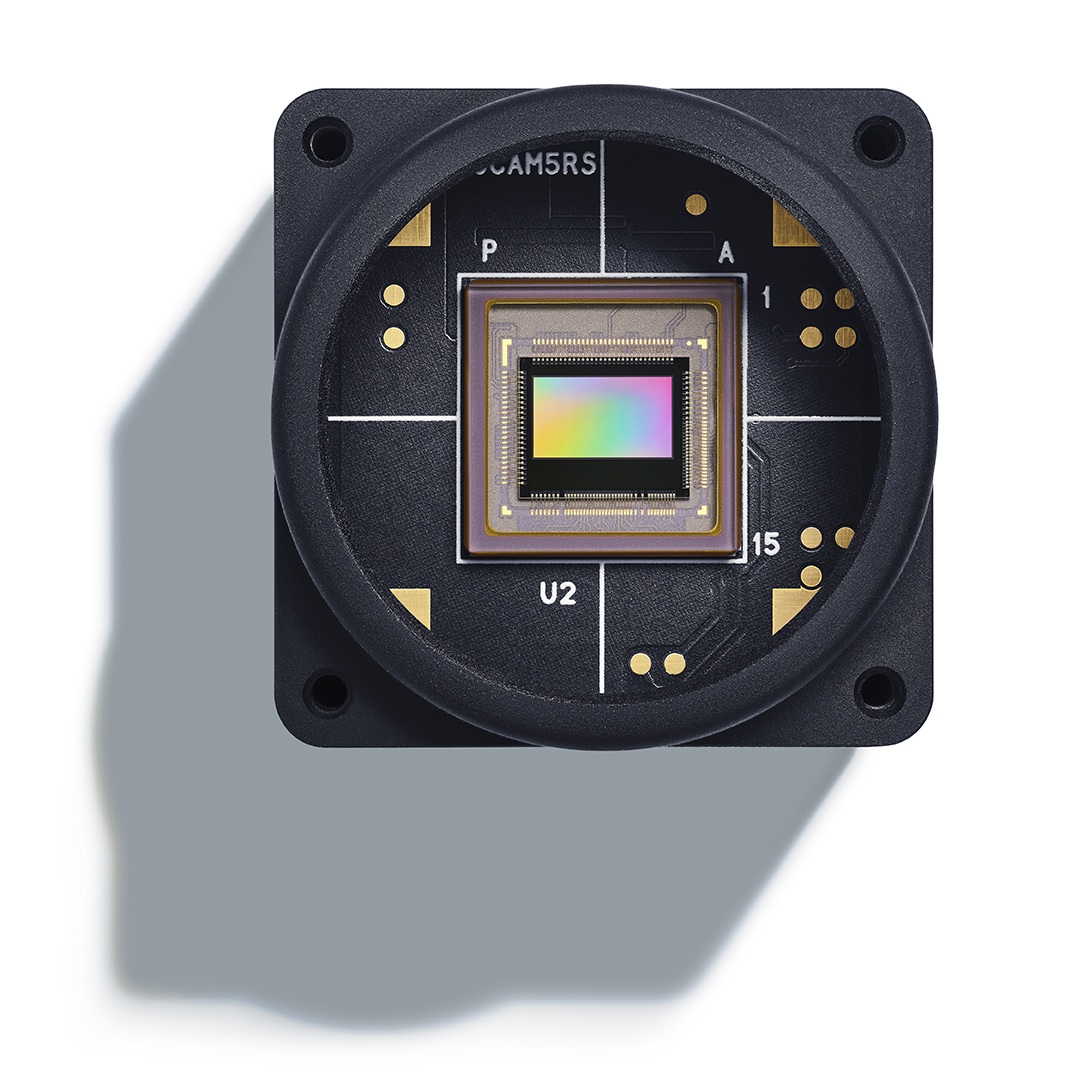

Reliable hand mesh reconstruction (HMR) from commonly used color and depth sensors is challenging, especially in scenarios with varied illumination and fast motion. An event camera is a highly promising alternative thanks to its high dynamic range and dense temporal resolution, but it lacks the salient texture appearance needed for hand mesh reconstruction. In this paper, we propose EvRGBHand, the first approach for 3D hand mesh reconstruction in which an event camera and an RGB camera compensate for each other. By fusing the two data modalities across the time, space, and information dimensions, EvRGBHand can tackle the overexposure and motion blur issues in RGB-based HMR and the foreground scarcity and background overflow issues in event-based HMR. We further propose EvRGBDegrader, which allows our model to generalize effectively to challenging scenes even when trained solely on standard scenes, thus reducing data acquisition costs. Experiments on real-world data demonstrate that EvRGBHand effectively addresses the issues that arise when using either type of camera alone by retaining the merits of both, and shows the potential to generalize to outdoor scenes and to another type of event camera. For code, models, and dataset, please refer to https://alanjiang98.github.io/evrgbhand.github.io/.