PHOTONIC NEUROMORPHIC ACCELERATORS FOR EVENT-BASED IMAGING FLOW CYTOMETRY

UNIVERSITY OF THE AEGEAN, NATIONAL TECHNICAL UNIVERSITY OF ATHENS, UNIVERSITY OF WEST ATTICA, PROPHESEE

I. Tsilikas, A. Tsirigotis, G. Sarantoglou, S. Deligiannidis, A. Bogris, C. Posch, G. Van den Branden, C. Mesaritakis

ABSTRACT

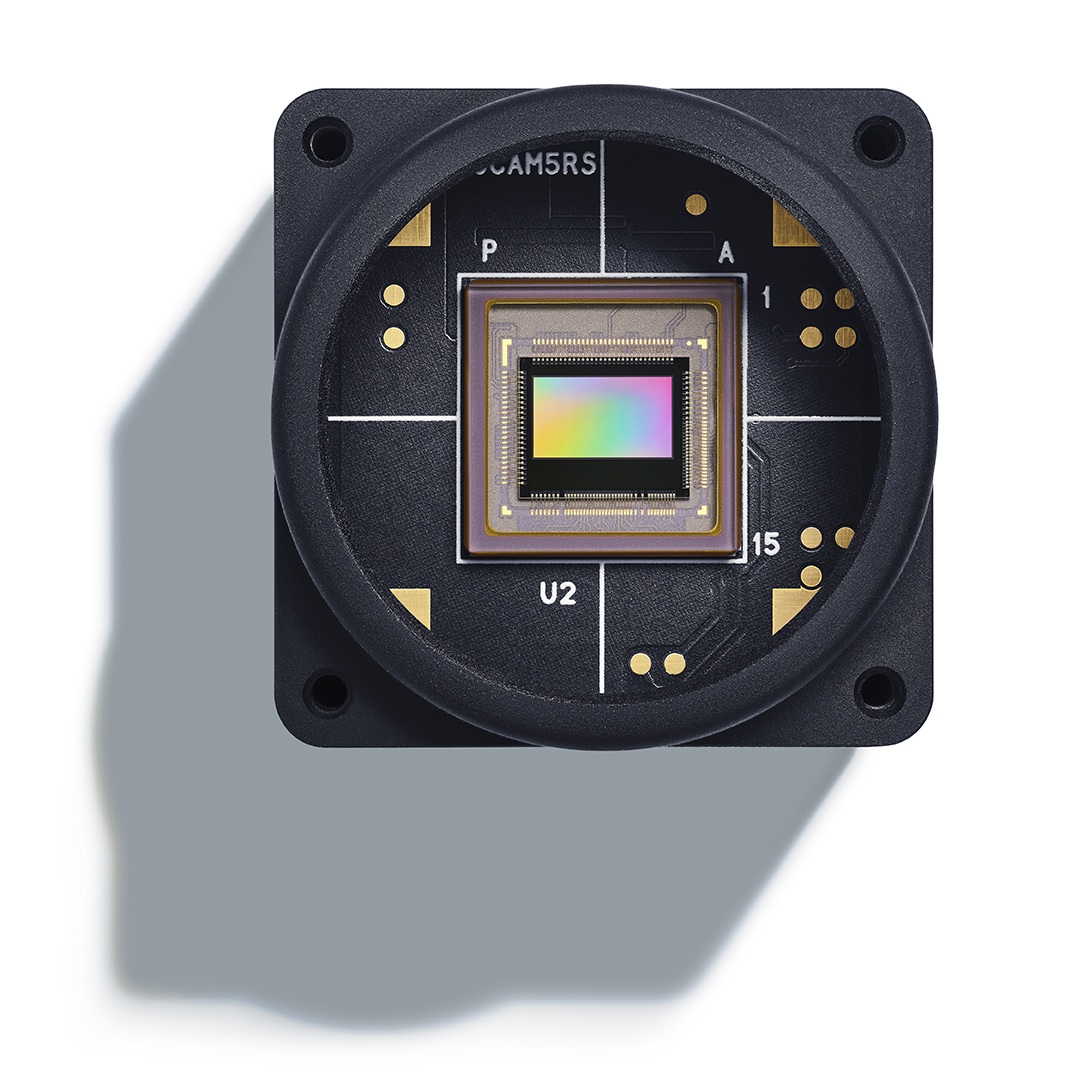

In this work, we present experimental results from a high-speed, label-free imaging flow cytometry system that combines the high capture rate and data sparsity of an event-based CMOS camera with lightweight photonic neuromorphic processing. This combination delivers high classification accuracy together with a large reduction in the number of trainable parameters in the digital machine-learning back-end. The event-based camera is capable of capturing 1 Gevent/s, where each event corresponds to a change in pixel contrast, analogous to the function of retinal ganglion cells. The photonic neuromorphic accelerator is based on a hardware-friendly, passive optical spectrum-slicing technique that extracts meaningful features from the generated spike trains using a purely analogue implementation of the convolution operation. The experimental scenario is the discrimination of artificial, calibrated polymethyl methacrylate (PMMA) beads of different diameters, flowing at a mean speed of 0.01 m/s. Using only lightweight, FPGA-compatible digital machine-learning schemes, classification accuracy peaked at 98.2%. In contrast, by experimentally pre-processing the raw spike data through the proposed photonic neuromorphic spectrum slicer at a rate of 3×10⁶ images per second, we achieved an accuracy of 98.6%, while reducing the number of trainable parameters in the classification back-end by a factor of 8 to 22, depending on the configuration of the digital neural network. These results confirm that neuromorphic sensing and neuromorphic computing can be efficiently merged into a unified bio-inspired system, offering a holistic enhancement for emerging bio-imaging applications.
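The abstract relies on two mechanisms: pixels that emit events on contrast changes, and a passive spectrum slicer that performs convolution optically. The sketch below illustrates both in Python/NumPy under idealised, hypothetical assumptions: a textbook event-pixel model with a log-intensity threshold, an event-count spike train that directly drives the optical field amplitude, Gaussian filter slices in normalised frequency units, and square-law photodetection. The helper names (`contrast_events`, `spike_train`, `spectrum_slice`) and every parameter value are illustrative only; they are not the authors' hardware parameters or processing pipeline.

```python
import numpy as np


def contrast_events(intensity, threshold=0.2):
    """Idealised event pixel (hypothetical model): emit an event whenever the
    log intensity differs from a reference level by at least `threshold`;
    each event moves the reference one threshold step toward the signal.
    Returns (sample_indices, polarities) with +1 (ON) or -1 (OFF)."""
    log_i = np.log(np.asarray(intensity, dtype=float))
    ref = log_i[0]
    idx, pol = [], []
    for t in range(1, log_i.size):
        diff = log_i[t] - ref
        # A large step can trigger several events at the same sample.
        while abs(diff) >= threshold:
            sign = 1 if diff > 0 else -1
            idx.append(t)
            pol.append(sign)
            ref += sign * threshold
            diff = log_i[t] - ref
    return np.array(idx, dtype=int), np.array(pol, dtype=int)


def spike_train(n_samples, event_idx):
    """Event count per time sample; polarity is discarded (assumed encoding)."""
    train = np.zeros(n_samples)
    np.add.at(train, event_idx, 1.0)  # accumulates repeated indices
    return train


def spectrum_slice(field, fs, centers, bandwidth):
    """Slice the spectrum of a complex baseband optical field with Gaussian
    band-pass filters and photodetect each slice (square-law detection).

    `centers` and `bandwidth` (FWHM) share the units of `fs`; all hypothetical.
    Returns detected power with shape (len(centers), len(field))."""
    field = np.asarray(field, dtype=complex)
    freqs = np.fft.fftfreq(field.size, d=1.0 / fs)
    spectrum = np.fft.fft(field)
    sigma = bandwidth / (2.0 * np.sqrt(2.0 * np.log(2.0)))  # FWHM -> std dev
    outputs = []
    for fc in centers:
        h = np.exp(-0.5 * ((freqs - fc) / sigma) ** 2)  # filter transfer function
        # Product in frequency = circular convolution with impulse response in time.
        sliced = np.fft.ifft(spectrum * h)
        outputs.append(np.abs(sliced) ** 2)  # photodiode output
    return np.array(outputs)


# Usage in normalised units (fs = 1, frequencies in cycles per sample):
# a bead brightening a single pixel as it flows past.
t = np.arange(1024)
intensity = 1.0 + 0.8 * np.exp(-0.5 * ((t - 512) / 30.0) ** 2)
idx, pol = contrast_events(intensity, threshold=0.1)
field = spike_train(t.size, idx)
slices = spectrum_slice(field, fs=1.0, centers=[-0.1, 0.0, 0.1, 0.2], bandwidth=0.05)
features = slices.sum(axis=1)  # one energy value per slice (illustrative)
print(features.shape)  # (4,)
```

Each filter multiplies the field spectrum by its transfer function, which in the time domain is a convolution of the field with the filter's impulse response; this is the sense in which a passive filter bank performs convolution in the analogue domain. The FFT makes the convolution circular, so a signal should be zero-padded if wrap-around matters. Reducing each slice to a single energy value is just one simple way to form a compact feature vector for a small digital classifier.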