TRUSTED BY THOUSANDS OF INDUSTRY PROFESSIONALS

Blur-free

No exposure time

High-Speed

>10k fps time resolution equivalent

1280x720px

Resolution

>120dB

Dynamic Range

0.08 Lux

Low light cutoff

Shutter-free

Neither global nor rolling shutter: asynchronous pixel activation

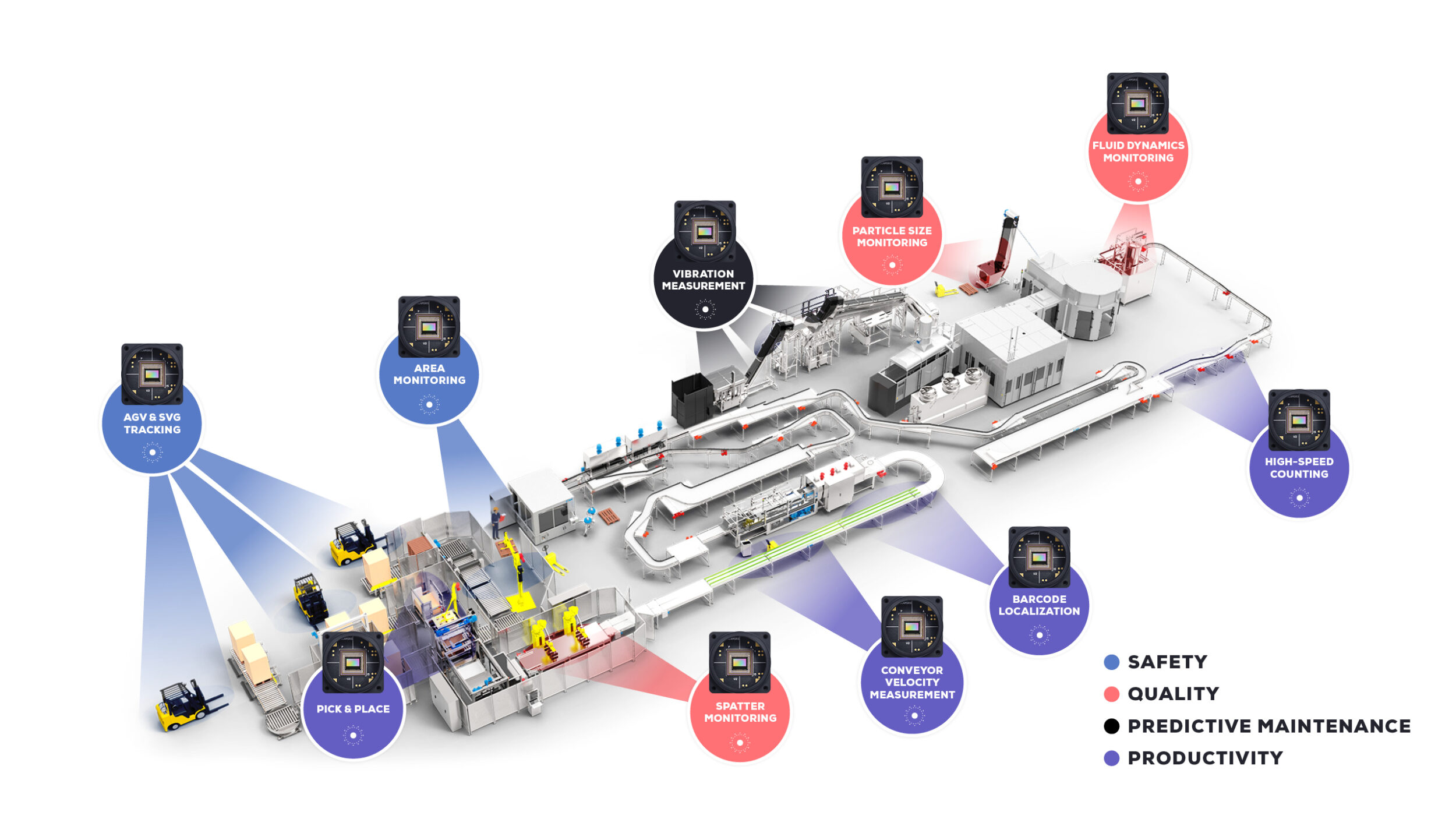

ADD VALUE TO YOUR INDUSTRIAL APPLICATIONS

BOOST PRODUCTIVITY

Boost your productivity by counting and measuring more than 1,000 objects per second and inspecting objects moving at over 10 m/s, with 100x less data to process.

UNPRECEDENTED QUALITY CONTROL

Cut reject rates with real-time feedback loops for advanced processing methods, with time resolution down to 5 µs. Unlock high-speed recognition applications such as OCR with blur-free asynchronous event output.

PREDICTIVE MAINTENANCE

Detect early signs of machine failure. The sensor's unique ability to measure vibration frequencies from 1 Hz to the kHz range helps predict abnormalities for predictive maintenance, minimizing spare parts inventory and machine downtime.

MONITORING + SAFETY

Monitor areas where workers and machines interact in real time, reaching next-generation safety levels without capturing images, even in complex lighting environments.

CUT COMPLEXITY & COSTS

Streamline your pipeline. The Metavision® sensor is frameless, has a low data rate and a high dynamic range, and is very sensitive in low-light scenes. These characteristics let you streamline cumbersome processes by removing frame grabbers, industrial PCs and custom illumination from your visual acquisition and processing pipelines.

ONE SENSOR, MANY APPLICATIONS

XYT MOTION ANALYSIS

Typical use cases: Movement analysis, Equipment health monitoring, Machine behavior monitoring

Discover the power of time-space continuity for your application by visualizing your data with our XYT viewer.

See between the frames

Zoom in time and understand fine motion in the scene

ULTRA SLOW MOTION

Typical use cases: Kinematic Monitoring, Predictive Maintenance

Slow down time, live, to a time resolution equivalent to over 200,000 frames per second, while generating orders of magnitude less data than traditional approaches. Understand the finest motion dynamics hidden in ultra-fast, fleeting events.

Over 200,000 fps (time resolution equivalent)

OBJECT TRACKING

Typical use cases: Part pick and place, Robot guidance, Trajectory monitoring

Track moving objects in the field of view. Leverage the low data rate and sparse information provided by event-based sensors to track objects with low compute power.

Continuous tracking in time: no more “blind spots” between frame acquisitions

Native segmentation: analyze only motion, ignore the static background

OPTICAL FLOW

Typical use cases: Conveyor speed measurement, Part/object speed measurement, Trajectory monitoring, Trajectory analysis, Trajectory anticipation

Rediscover this fundamental computer vision building block, with an event twist. Understand motion much more efficiently through continuous pixel-by-pixel tracking rather than sequential frame-by-frame analysis.

17x less power compared to traditional image-based approaches

Get features only on moving objects

HIGH-SPEED COUNTING

Typical use cases: Object counting and gauging, Pharmaceutical pill counting, Mechanical part counting

Count objects at unprecedented speed and with high accuracy, generating less data and without any motion blur. Objects are counted as they pass through the field of view, with each pixel triggering independently as the object goes by.

>1,000 obj/s throughput

>99.5% accuracy at 1,000 obj/s
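As an illustration of counting objects "as they pass through the field of view", here is a minimal sketch that counts crossings of a single virtual line. It is not Prophesee's counting algorithm: the function name, the `(x, y, p, t)` tuple layout and the `gap_us` parameter are hypothetical, and the heuristic assumes objects are separated in time, so two objects crossing the line at the same moment would be counted once.

```python
def count_line_crossings(events, line_y, gap_us):
    """Count objects crossing image row `line_y` (hypothetical heuristic).

    `events` is a time-ordered iterable of (x, y, p, t) tuples, t in microseconds.
    Events on the counting row are grouped into bursts; a new burst, i.e. a new
    object, starts whenever more than `gap_us` microseconds pass without any
    event on that row.
    """
    count = 0
    last_t = None
    for x, y, p, t in events:
        if y != line_y:
            continue  # only the virtual counting line is analyzed
        if last_t is None or t - last_t > gap_us:
            count += 1  # quiet period exceeded: a new object reached the line
        last_t = t
    return count
```

Because only events on one row are inspected, the cost per object stays small, which reflects the "less data" argument above; a production counter would also need to handle touching or overlapping objects.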

SPATTER MONITORING

Typical use cases: Traditional milling, laser & process monitoring, Quality prediction

Track small particles with spatter-like motion. Thanks to the high time resolution and dynamic range of our Event-Based Vision sensor, small particles can be tracked in the most difficult and demanding environments.

Up to 200kHz tracking frequency (5µs time resolution)

Simultaneous XYT tracking of all particles

VIBRATION MONITORING

Typical use cases: Motion monitoring, Vibration monitoring, Frequency analysis for predictive maintenance

Monitor vibration frequencies continuously, remotely, with pixel precision, by tracking the temporal evolution of every pixel in a scene. For each event, the pixel coordinates, the polarity of the change and the exact timestamp are recorded, thus providing a global, continuous understanding of vibration patterns.

From 1 Hz to the kHz range

1 Pixel Accuracy
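Since each event carries the pixel coordinates, polarity and timestamp, a vibration frequency can in principle be read from the timing of events at a single pixel. The sketch below is a simplified illustration, not the method used by Prophesee's vibration tools: it assumes polarity is encoded as 1 (positive) and 0 (negative), that timestamps are in microseconds, and that each vibration cycle produces one run of positive events followed by one run of negative events. The function name is hypothetical.

```python
from statistics import median


def estimate_frequency_hz(events):
    """Estimate the dominant vibration frequency seen by one pixel.

    `events` is a time-ordered list of (t_us, polarity) pairs for a single pixel.
    Assumption: the start of every run of positive events marks a new cycle.
    """
    cycle_starts = []
    previous_polarity = None
    for t_us, polarity in events:
        # A switch to positive polarity is taken as the start of a new cycle.
        if polarity == 1 and previous_polarity != 1:
            cycle_starts.append(t_us)
        previous_polarity = polarity
    if len(cycle_starts) < 2:
        return None  # not enough cycles to measure a period
    periods = [b - a for a, b in zip(cycle_starts, cycle_starts[1:])]
    # The median period is robust to an occasional missed or extra run.
    return 1e6 / median(periods)  # microseconds -> Hz
```

For example, a pixel that sees a positive run every 10,000 µs yields an estimate of 100 Hz. Running this per pixel gives the kind of frequency map described above.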

PARTICLE / OBJECT SIZE MONITORING

Typical use cases: High speed counting, Batch homogeneity & gauging

Control, count and measure the size of objects moving at very high speed in a channel or a conveyor.

Get instantaneous quality statistics in your production line, to control your process.

Speeds of up to 500,000 pixels/second

99% Counting precision

EDGELET TRACKING

Typical use cases: High speed location, Guiding and fitting for pick & place

Track 3D edges and/or fiducial markers for your AR/VR application. Benefit from the high temporal resolution of events to increase the accuracy and robustness of your edge tracking application.

Automated 3D object detection with geometrical prior

3D object real-time tracking

VELOCITY & FLUID MONITORING

Typical use cases: Fluid dynamics monitoring, Continuous process monitoring of liquid flow

CABLE / YARN VELOCITY & SLIPPING MONITORING

Typical use cases: Yarn quality control, Cable manufacturing monitoring

PLUME MONITORING

Typical use cases: Dispensing uniformity & Coverage control, Quality & efficiency of dispersion, Fluid dynamics analysis for inline process monitoring

NEUROMORPHIC VISION AND TOUCH COMBINED FOR ENHANCED ROBOTIC CAPABILITIES

Researchers at the Collaborative, Learning, and Adaptive Robots (CLeAR) Lab and the TEE Research Group at the National University of Singapore are combining Prophesee's Event-Based Vision with touch to build new visual-tactile datasets for developing better learning systems in robotics. This neuromorphic fusion of touch and vision is being used to help robots grip and identify objects.

1000x faster than human touch

0.08s rotational slip detection

Don’t see a use case that fits?

Our team of experts can provide access to additional libraries of privileged content.

Contact us >

PROPHESEE METAVISION® TECHNOLOGY

SENSING

Inspired by the human retina, Prophesee’s patented Event-based Metavision® sensors feature pixels, each powered by its own embedded intelligent processing, allowing them to activate independently.

SOFTWARE

Award-winning Event-Based software suite offering access to more than 64 algorithms, 105 code samples and 17 tutorials in total, the industry’s widest selection available to date.

CUT TIME TO SOLUTION

EXTENSIVE DOCUMENTATION & SUPPORT

With your EVK purchase, get 2 hours of premium support as well as privileged access to our Knowledge Center, including over 110 articles, application notes, in-depth technology discovery material and step-by-step guides.

5X AWARD-WINNING EVENT-BASED VISION SOFTWARE SUITE

Choose from an easy-to-use data visualizer or an advanced API with 64 algorithms, 105 code samples and 17 tutorials.

Get started today with the most comprehensive Event-based Vision software toolkit to date.

OPEN SOURCE ARCHITECTURE

Metavision SDK is based on an open source architecture, unlocking full interoperability between our software and hardware devices and enabling a fast-growing Event-based community.

INDUSTRY NEWS

Prophesee and Tobii partner to develop next-generation event-based eye tracking solution for AR/VR and smart eyewear

This collaboration combines Tobii’s best-in-class eye tracking platform with Prophesee’s pioneering event-based sensor technology. Together, the companies aim to develop an ultra-fast and power-efficient eye-tracking solution, specifically designed to meet the stringent power and form factor requirements of compact and battery-constrained smart eyewear.

Eoptic, Inc. and Prophesee Forge Strategic Partnership to Evolve Multimodal, High-Speed Imaging Systems

Eoptic, Inc., a leader in advanced imaging and optics systems integration, and Prophesee, the global pioneer in neuromorphic vision systems, today announced a strategic collaboration to integrate high-speed event detection into Eoptic’s innovative and flexible prismatic sensor module. By combining Eoptic’s Cambrian Edge imaging platform with Prophesee’s cutting-edge, event-based Metavision® sensors, the partnership aims to tackle real-time imaging challenges and open new frontiers in dynamic visual processing.

PROPHESEE Recap 2024

Recap of all the major announcements, product launches, awards and recognition, press and more in the year 2024.

Q&A

Do I need to buy an EVK to start?

You don’t necessarily need an Evaluation Kit or Event-based Vision equipment to start your discovery. You can start with Metavision Studio and interact with provided recordings first.

What data do I get from the sensor exactly?

The sensor will output a continuous stream of data consisting of:

- X and Y coordinates, indicating the location of the activated pixel in the sensor array

- The polarity, indicating whether the event corresponds to a positive (dark to light) or negative (light to dark) contrast change

- A timestamp “t”, precisely encoding when the event was generated, with microsecond resolution

For more information, check our pages on Event-based concepts and events streaming and decoding.
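To make the event format concrete, here is a minimal in-memory representation of one event and a helper that selects events from a time window. This is a hypothetical sketch for illustration, not the Metavision SDK's own event type; the field names and the 1/0 polarity encoding are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    x: int  # column of the activated pixel
    y: int  # row of the activated pixel
    p: int  # polarity (assumed encoding): 1 = dark to light, 0 = light to dark
    t: int  # timestamp in microseconds


def events_in_window(events, t_start, t_end):
    """Return the events whose timestamp falls in [t_start, t_end)."""
    return [e for e in events if t_start <= e.t < t_end]
```

Selecting events by time window like this is the basic step behind most processing, including frame generation for visualization.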

How can you be “blur-free”?

Image blur is mostly caused by movement of the camera or the subject during exposure. This can happen when the shutter speed is too slow or if movements are too fast.

With an event-based sensor, there is no exposure. Instead, each pixel independently triggers a continuous flow of “events” whenever it detects a change in illumination. Hence there is no blur.

Can I also get images in addition to events?

You cannot get images directly from our event-based sensor, but for visualization purposes, you can generate frames from the events.

To do so, events are accumulated over a period of time (usually the frame period, for example 20 ms for 50 FPS), because the number of events occurring at a precise instant T (with microsecond precision) can be very small.

The frame is first initialized with a background color (e.g. white). Then, for each event that occurred during the frame period, the corresponding pixel is drawn in the frame.
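The accumulation steps above can be sketched in a few lines. This is a simplified illustration, not the Metavision SDK's frame generator: the function name, the `(x, y, p, t)` tuple layout, the 1/0 polarity encoding and the two event colors are all assumptions chosen for the example.

```python
BACKGROUND = (255, 255, 255)   # white background, as in the text
POSITIVE_COLOR = (0, 128, 255)  # arbitrary color for dark-to-light events
NEGATIVE_COLOR = (40, 40, 40)   # arbitrary color for light-to-dark events


def events_to_frame(events, width, height, t_start, fps=50):
    """Accumulate the events of one frame period into an RGB frame.

    `events` is an iterable of (x, y, p, t) tuples with t in microseconds.
    The frame period is 1 / fps seconds, e.g. 20 ms (20,000 us) at 50 FPS.
    """
    period_us = 1_000_000 // fps
    t_end = t_start + period_us
    # 1. Initialize every pixel with the background color.
    frame = [[BACKGROUND for _ in range(width)] for _ in range(height)]
    # 2. Draw each event that occurred during [t_start, t_end).
    for x, y, p, t in events:
        if t_start <= t < t_end:
            # A later event at the same pixel overwrites an earlier one.
            frame[y][x] = POSITIVE_COLOR if p == 1 else NEGATIVE_COLOR
    return frame
```

An event stamped exactly at `t_end` belongs to the next frame, so consecutive frames never draw the same event twice.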

What can I do with the provided software license?

The suite is provided under a commercial license, enabling you to use, build and even sell your own commercial application at no cost. Read the license agreement here.

What is the frame rate?

There is no frame rate: our Metavision sensor is neither a global shutter nor a rolling shutter sensor; it is shutter-free.

This represents a new machine vision category enabled by a patented sensor design that equips each pixel with its own intelligent processing, enabling the pixels to activate independently when a change is detected.

As soon as an event is generated, it is sent to the system, continuously and pixel by pixel, rather than at a fixed pace.

How can the dynamic range be so high?

The pixels of our event-based sensor contain photoreceptors that detect changes of illumination on a logarithmic scale. Hence each pixel automatically adapts to both low and high light intensities and does not saturate the way a classical frame-based sensor would.
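The ">120dB" figure quoted at the top of this page can be related to an intensity ratio using the decibel convention usual for image sensors, and the logarithmic response can be written as a contrast condition. This is a sketch under an idealized event-pixel model; the symbols $I$ (pixel illumination), $t_{\mathrm{ref}}$ (time of the pixel's last event) and $C$ (contrast threshold) are introduced here for illustration and are not a Prophesee specification.

$$
\mathrm{DR}_{\mathrm{dB}} = 20\log_{10}\frac{I_{\max}}{I_{\min}}, \qquad 120\ \mathrm{dB} \;\Rightarrow\; \frac{I_{\max}}{I_{\min}} = 10^{120/20} = 10^{6}
$$

$$
\left|\ln I(t) - \ln I(t_{\mathrm{ref}})\right| = \left|\ln\frac{I(t)}{I(t_{\mathrm{ref}})}\right| \ge C \quad\Rightarrow\quad \text{event}
$$

Because the condition depends only on the ratio $I(t)/I(t_{\mathrm{ref}})$, a 20% change in illumination produces the same response in a dim scene as in a bright one, which is why the pixel adapts to both without saturating.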

I have existing image-based datasets. Can I use them to train Event-based models?

Yes, you can leverage our “Video to Event Simulator”. This is a Python script that transforms frame-based images or videos into event-based counterparts. The resulting event-based files can then be used to train Event-based models.
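To give an idea of what such a conversion involves, here is a deliberately simplified frame-to-event sketch based on the logarithmic contrast model. It is not Prophesee's Video to Event Simulator and does not reproduce its behavior: the function name, the `threshold` and `eps` parameters and the `(x, y, p, t)` output layout are hypothetical. Real simulators typically also interpolate event timestamps between frames and model sensor noise; this sketch stamps all events with the later frame's timestamp and has no noise model.

```python
import math


def frames_to_events(frames, timestamps_us, threshold=0.2, eps=1e-3):
    """Convert grayscale frames (lists of rows) into (x, y, p, t) events.

    A pixel emits one event each time its log intensity moves `threshold`
    away from its reference level; `eps` avoids log(0) on black pixels.
    """
    height, width = len(frames[0]), len(frames[0][0])
    # Reference log intensity per pixel, initialized from the first frame.
    ref = [[math.log(frames[0][y][x] + eps) for x in range(width)]
           for y in range(height)]
    events = []
    for frame, t in zip(frames[1:], timestamps_us[1:]):
        for y in range(height):
            for x in range(width):
                diff = math.log(frame[y][x] + eps) - ref[y][x]
                n = int(abs(diff) // threshold)  # number of threshold crossings
                if n == 0:
                    continue
                p = 1 if diff > 0 else 0  # 1 = brighter, 0 = darker
                events.extend((x, y, p, t) for _ in range(n))
                # Move the reference by the crossed amount, keeping the remainder.
                ref[y][x] += math.copysign(n * threshold, diff)
    return events
```

With `threshold=0.2`, a pixel whose brightness doubles between two frames (a log change of about 0.69) emits three positive events, while unchanged pixels emit none, mirroring the sensor's focus on change.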