A COMPREHENSIVE PRODUCT RANGE TO FIT YOUR DESIGN NEEDS

OPTICAL FLEX MODULE

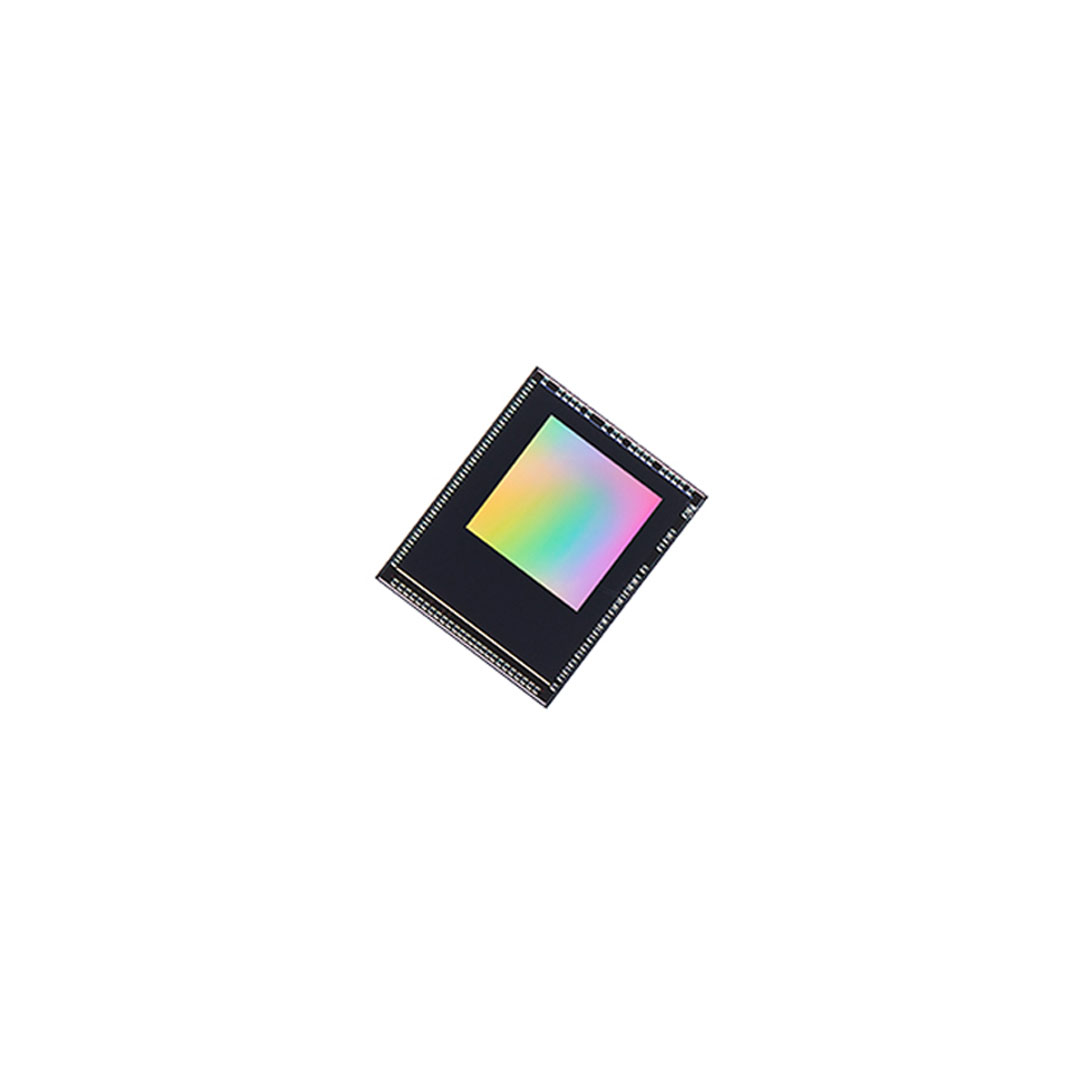

Compact integrated optics module housing a 320×320 pixel Event-based Metavision® sensor with embedded features.

EVALUATION KITS

Plug-and-play, ready-to-use platform to get a head start on evaluation. Available in two variants: chip-on-board and optical module.

Q&A

Do I need to buy an EVK to start?

You don’t necessarily need an Evaluation Kit or Event-based Vision equipment to start your discovery. You can start with Metavision Studio and interact with provided recordings first.

What data do I get from the sensor exactly?

The sensor will output a continuous stream of data consisting of:

- X and Y coordinates, indicating the location of the activated pixel in the sensor array

- The polarity, indicating whether the event corresponds to a positive (dark to light) or negative (light to dark) contrast change

- A timestamp “t”, precisely encoding when the event was generated, at microsecond resolution

For more information, check our pages on Event-based concepts and events streaming and decoding.
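As an illustration of the four fields listed above, here is a minimal Python sketch of how a short event stream could be represented. The `Event` type, its field names, and the 1/0 encoding of polarity are assumptions made for this example; the actual encoding is described in the events streaming and decoding pages.

```python
from typing import NamedTuple


class Event(NamedTuple):
    """One event. Hypothetical layout chosen for illustration only."""
    x: int  # column of the activated pixel in the sensor array
    y: int  # row of the activated pixel in the sensor array
    p: int  # polarity: 1 = positive (dark to light), 0 = negative (light to dark)
    t: int  # timestamp in microseconds


def describe(ev: Event) -> str:
    """Return a human-readable description of a single event."""
    kind = "positive (dark to light)" if ev.p == 1 else "negative (light to dark)"
    return f"t={ev.t} us: pixel ({ev.x}, {ev.y}) saw a {kind} contrast change"


# A tiny made-up stream: events arrive one by one, not at a fixed pace.
events = [
    Event(x=12, y=40, p=1, t=1_000),
    Event(x=13, y=40, p=0, t=1_004),
    Event(x=200, y=7, p=1, t=1_250),
]

for ev in events:
    print(describe(ev))
```

Note that the stream carries no pixel intensities, only where, when, and in which direction a contrast change occurred.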

How can you be “blur-free”?

Image blur is mostly caused by movement of the camera or the subject during exposure. This can happen when the shutter speed is too slow or if movements are too fast.

With an event-based sensor, there is no exposure but rather a continuous flow of “events” triggered by each pixel independently whenever an illumination change is detected. Hence there is no blur.

Can I also get images in addition to events?

You cannot get images directly from our event-based sensor, but for visualization purposes, you can generate frames from the events.

To do so, events are accumulated over a period of time (usually the frame period, for example 20 ms for 50 FPS), because the number of events that occurred at any precise time T (with microsecond precision) could be very small.

The frame is first initialized with its background color (e.g. white). Then, for each event occurring during the frame period, the corresponding pixel is written into the frame.
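The accumulation described above can be sketched in a few lines of Python. This is a minimal illustration and not the SDK's frame generation code: the function names, the `(x, y, p, t)` tuple layout, and the gray values used for each polarity are assumptions made for the example.

```python
BACKGROUND = 255      # white background, as in the example above
POSITIVE_VALUE = 0    # arbitrary display value for positive events (assumption)
NEGATIVE_VALUE = 128  # arbitrary display value for negative events (assumption)


def frame_period_us(fps):
    """Frame period in microseconds, e.g. 50 FPS -> 20 000 us (20 ms)."""
    return 1_000_000 // fps


def events_to_frame(events, width, height, t_start, period_us):
    """Accumulate events with t_start <= t < t_start + period_us into one frame.

    `events` is an iterable of (x, y, p, t) tuples, with t in microseconds.
    """
    # Initialize the frame with its background color.
    frame = [[BACKGROUND] * width for _ in range(height)]
    t_end = t_start + period_us
    for x, y, p, t in events:
        if t_start <= t < t_end:
            frame[y][x] = POSITIVE_VALUE if p == 1 else NEGATIVE_VALUE
    return frame


events = [
    (1, 0, 1, 500),     # positive event early in the frame period
    (2, 1, 0, 19_999),  # negative event just before the period ends
    (3, 1, 1, 20_000),  # belongs to the next frame, so it is ignored here
]
frame = events_to_frame(events, width=4, height=2,
                        t_start=0, period_us=frame_period_us(50))
for row in frame:
    print(row)
```

If several events hit the same pixel during the period, this sketch keeps only the most recent one; other accumulation policies are possible.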

What can I do with the provided software license?

The suite is provided under a commercial license, enabling you to use it to build, and even sell, your own commercial application at no cost. Read the license agreement here.

What is the frame rate?

There is no frame rate. Our Metavision sensor is neither a global shutter nor a rolling shutter; it is actually shutter-free.

This represents a new machine vision category enabled by a patented sensor design that embeds intelligent processing in each pixel, enabling pixels to activate independently when a change is detected.

As soon as an event is generated, it is sent to the system, continuously, pixel by pixel, rather than at a fixed pace.

How can the dynamic range be so high?

The pixels of our event-based sensor contain photoreceptors that detect changes of illumination on a logarithmic scale. Hence each pixel automatically adapts to low and high light intensities and does not saturate as a classical frame-based sensor would.
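To see why a logarithmic scale helps, the short Python sketch below compares the same 20% brightening in a dim scene and in a very bright one. The intensity values and the contrast threshold are hypothetical numbers chosen for illustration, not sensor specifications.

```python
import math

CONTRAST_THRESHOLD = 0.15  # hypothetical threshold on the log-intensity change


def log_change(before, after):
    """Change in log intensity between two illumination levels."""
    return math.log(after / before)


# The same relative change (+20%) at two very different light levels.
dim = log_change(1.0, 1.2)
bright = log_change(10_000.0, 12_000.0)

print("linear difference:", 1.2 - 1.0, "vs", 12_000.0 - 10_000.0)
print("log difference:   ", dim, "vs", bright)
print("both exceed threshold:",
      dim > CONTRAST_THRESHOLD and bright > CONTRAST_THRESHOLD)
```

On a linear scale the two changes differ by four orders of magnitude, while on a logarithmic scale they are the same size, so a single threshold can serve both dim and bright conditions.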

I have existing image-based datasets, can I use them to train Event-based models?

Yes, you can leverage our “Video to Event Simulator”. This is a Python script that allows you to transform frame-based images or videos into their Event-based counterparts. Those Event-based files can then be used to train Event-based models.
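The general idea of turning frames into events can be illustrated with a deliberately simplified Python sketch. This is not the “Video to Event Simulator” and does not use its interface: the function name, the threshold, the `eps` offset, and the `(x, y, p, t)` output layout are all assumptions made for this example.

```python
import math


def frames_to_events(prev_frame, next_frame, t_us, threshold=0.2, eps=1.0):
    """Emit one (x, y, p, t) event per pixel whose log intensity changed enough.

    Frames are lists of rows of gray levels. `eps` avoids log(0).
    Simplification: every event gets the timestamp of the second frame.
    """
    events = []
    for y, (row_prev, row_next) in enumerate(zip(prev_frame, next_frame)):
        for x, (a, b) in enumerate(zip(row_prev, row_next)):
            delta = math.log(b + eps) - math.log(a + eps)
            if delta >= threshold:
                events.append((x, y, 1, t_us))  # positive: dark to light
            elif delta <= -threshold:
                events.append((x, y, 0, t_us))  # negative: light to dark
    return events


prev_frame = [[10, 10, 10],
              [200, 200, 200]]
next_frame = [[10, 50, 10],
              [200, 200, 90]]

events = frames_to_events(prev_frame, next_frame, t_us=20_000)
print(events)
```

Unchanged pixels produce no events, which is why the resulting data is much sparser than the input frames. A realistic simulator would also need to spread timestamps between frames rather than assigning a single time to all events.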