Discover Metavision® SDK5 PRO

5x Award-Winning Event-Based Vision Software Toolkit.

Now included with Prophesee USB EVK purchase

Our Metavision® SDK5 PRO software toolkit is now included with the purchase of a USB EVK, or available for standalone purchase.

It includes a commercial-grade license, binaries and source code for all modules, 2H premium support and access to extensive knowledge bases.

With Metavision SDK5 PRO, evaluate, design and sell your own Metavision product, leveraging Prophesee Event-Based Metavision technologies.

5X AWARD-WINNING EVENT-BASED VISION SDK

Choose from an easy-to-use data visualizer or an advanced API with 64 algorithms, 105 code samples and 17 tutorials.

Get started today with the most comprehensive Event-Based Vision software toolkit to date.

OPEN SOURCE ARCHITECTURE

Metavision SDK is based on an open source architecture, unlocking full interoperability between our software and hardware devices and enabling a fast-growing Event-Based community.

LEADING EVENT-BASED MACHINE LEARNING TOOLKIT

Build your advanced Event-Based ML network leveraging the most performant object detector to date (spotlighted at NeurIPS), the largest HD dataset, Event to Video and Video to Event pipelines, training, inference and grading features, and more.

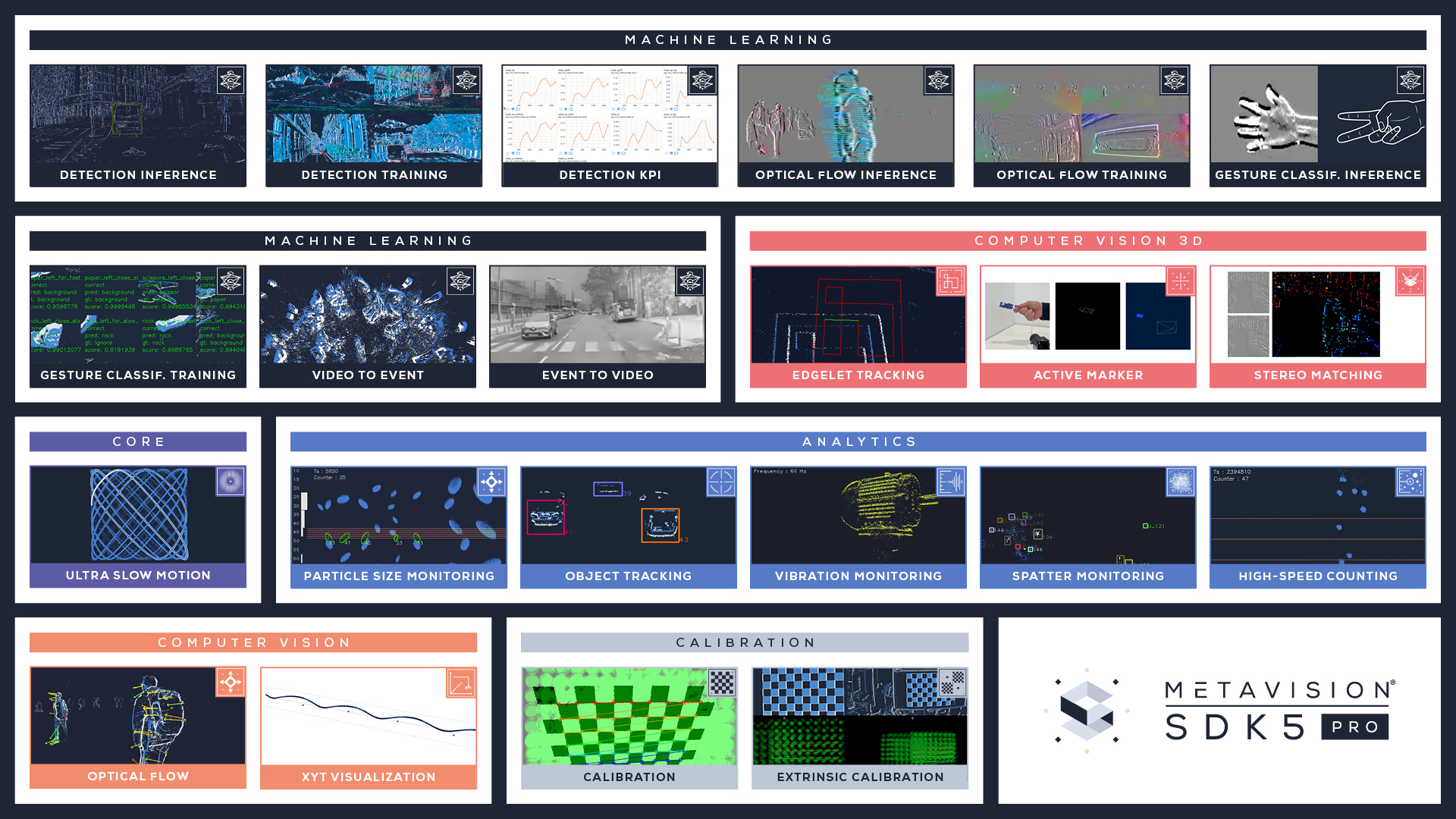

6 MODULE FAMILIES

Covering a wide range of computer vision fields (Machine Learning, Computer Vision, camera calibration, high-performance applications and more), the SDK has the tool you are looking for.

EXTENSIVE DOCUMENTATION & SUPPORT

Get a head start on your product development with 300+ pages of regularly updated content on docs.prophesee.ai: programming guides, reference data and detailed recommendations.

GET RESULTS IN MINUTES

We took over 7 years to perfect the largest collection of pre-built pipelines, extensive datasets, code samples, GUI tools and more, so you can get results in minutes.

APPLICATIONS

DETECTION INFERENCE

Unlock the potential of Event-Based machine learning with a set of dedicated tools providing everything you need to start running Deep Neural Networks (DNNs) on events. Leverage our pretrained automotive model written in PyTorch, and experiment with live detection and tracking using our C++ pipeline. Use our comprehensive Python library to design your own networks.

Network pretrained on a 15-hour automotive dataset with 23M labels

Live detection and tracking @100Hz

DETECTION TRAINING

Train your own object detection application with our ready-to-use training framework. Experiment with multiple pre-built Event-Based tensor representations and network topologies suited to event-based data.

4 pre-built tensor representations

Automated HDF5 dataset generation

Comprehensive training toolbox, including tailor-made preprocessing, DataLoader, NN architecture, visualization tools and more
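To give a concrete idea of what an event-based tensor representation can be, the sketch below bins events into a two-channel histogram (one channel per polarity) over a time window. This is a common representation in the event-vision literature and is not necessarily one of the four shipped with the SDK. The `Event` struct and the `histogram_tensor` function are hypothetical stand-ins, not Metavision APIs.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for a CD event: pixel coordinates, polarity (0 = OFF, 1 = ON), timestamp in microseconds.
struct Event {
    std::uint16_t x;
    std::uint16_t y;
    std::int16_t p;
    std::int64_t t;
};

// Builds a [2][height][width] histogram (flattened, channel-major) from the events with t in [t_start, t_end).
// Channel 0 counts OFF events, channel 1 counts ON events.
std::vector<float> histogram_tensor(const std::vector<Event> &events, int width, int height,
                                    std::int64_t t_start, std::int64_t t_end) {
    std::vector<float> tensor(2 * static_cast<std::size_t>(width) * height, 0.0f);
    for (const Event &ev : events) {
        if (ev.t < t_start || ev.t >= t_end) continue;  // outside the time window
        if (ev.x >= width || ev.y >= height) continue;  // outside the sensor area
        const std::size_t channel = ev.p > 0 ? 1 : 0;
        const std::size_t idx = (channel * height + ev.y) * width + ev.x;
        tensor[idx] += 1.0f;  // one more event of this polarity at this pixel
    }
    return tensor;
}
```

Separating polarities keeps the sign of brightness changes, which a single-channel count would discard; the window length then plays the same role as an accumulation time.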

VIDEO TO EVENT

Bridge the frame-based and event-based worlds with our Video to Event pipeline. Generate synthetic data to augment your dataset, and partially reuse existing frame-based references.

Off-the-shelf, ready-to-use Event simulator

GPU-compatible Event simulator seamlessly integrated

EVENT TO VIDEO

Reconstruct grayscale images from events with this neural network. Save resources by enabling automatic annotation of event-based data for tasks already solved in the frame-based domain.

Off-the-shelf, ready-to-use Event to video converter

Leverage existing frame-based neural networks to obtain labels

DETECTION KPI

Evaluate your detection performance with our Object Detection KPI toolkit in line with the latest COCO API.

mAP, mAR and their variants included
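COCO-style mAP and mAR are built on the intersection over union (IoU) between predicted and ground-truth boxes: a detection is matched to a ground-truth box when their IoU reaches a threshold, and COCO averages the results over thresholds from 0.50 to 0.95. The sketch below shows only that building block; the `Box` struct with an `(x, y, w, h)` layout is an assumption, not the toolkit's API.

```cpp
#include <algorithm>

// Hypothetical axis-aligned box: top-left corner (x, y), width w and height h, in pixels.
struct Box {
    double x, y, w, h;
};

// Intersection over union of two boxes; returns 0 when the union area is empty.
double iou(const Box &a, const Box &b) {
    const double ix = std::max(0.0, std::min(a.x + a.w, b.x + b.w) - std::max(a.x, b.x));  // overlap width
    const double iy = std::max(0.0, std::min(a.y + a.h, b.y + b.h) - std::max(a.y, b.y));  // overlap height
    const double inter = ix * iy;
    const double uni = a.w * a.h + b.w * b.h - inter;
    return uni > 0.0 ? inter / uni : 0.0;
}
```

Two 10×10 boxes offset horizontally by 5 pixels overlap on 50 px² out of a 150 px² union, giving an IoU of 1/3: a match at no COCO threshold.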

OPTICAL FLOW INFERENCE

Predict optical flow from Event-Based data, leveraging our pretrained flow model, customized data loader, and collections of loss functions and visualization tools to set up your flow inference pipeline.

Self-supervised Flownet architectures

Lightweight model

CORNERS DETECTION & TRACKING

This method can generate stable keypoints and very long tracks. Use it in your AR/VR applications, and in HDR and fast-moving scenes.

Off-the-shelf, ready-to-use event-to-corners detector

100Hz detection & tracking

GESTURE CLASSIFICATION INFERENCE

Run a live Rock-Paper-Scissors game with our pre-trained model, on a live stream or on event-based recordings.

Off-the-shelf, ready-to-use classifier

GESTURE CLASSIFICATION TRAINING

Train your own object classifier with this tailor-made Event-based training pipeline.

Comprehensive training toolbox, including tailor-made preprocessing, DataLoader, NN architecture, visualization tools and more

OPTICAL FLOW TRAINING

No ground truth? Leverage our self-supervised architecture. Train your Optical Flow application with our custom-built FlowNet training framework. Experiment with 4 pre-built Flow Networks tailor-made for your Event-based data.

Comprehensive flow training toolbox, including different network topologies, various loss functions and visualization modes

PARTICLE SIZE MONITORING

Control, count and measure the size of objects moving at very high speed in a channel or on a conveyor. Get instantaneous quality statistics from your production line to control your process.

Up to 500,000 pixels/second speed

99.9% Counting precision

OBJECT TRACKING

Track moving objects in the field of view. Leverage the low data-rate and sparse information provided by event-based sensors to track objects with low compute power.

Continuous tracking in time: no more “blind spots” between frame acquisitions

Native segmentation: analyze only motion, ignore the static background

VIBRATION MONITORING

Monitor vibration frequencies continuously, remotely, with pixel precision, by tracking the temporal evolution of every pixel in a scene. For each event, the pixel coordinates, the polarity of the change and the exact timestamp are recorded, thus providing a global, continuous understanding of vibration patterns.

From 1 Hz to the kHz range

1 Pixel Accuracy
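The per-pixel principle can be illustrated with a deliberately simple estimator (an assumption, not the SDK's vibration-estimation algorithm): at a pixel watching a periodic motion, ON events tend to arrive in one burst per oscillation cycle, so the frequency is roughly the inverse of the mean interval between burst onsets. The function name and the `min_gap_us` burst-separation parameter are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Estimates the vibration frequency (Hz) at one pixel from the sorted timestamps (microseconds) of its
// ON-polarity events. An event closer than min_gap_us to the previous one belongs to the same burst.
double estimate_frequency_hz(const std::vector<std::int64_t> &on_timestamps_us, std::int64_t min_gap_us) {
    std::vector<std::int64_t> burst_starts;
    for (std::size_t i = 0; i < on_timestamps_us.size(); ++i) {
        if (i == 0 || on_timestamps_us[i] - on_timestamps_us[i - 1] > min_gap_us) {
            burst_starts.push_back(on_timestamps_us[i]);  // a new oscillation cycle starts here
        }
    }
    if (burst_starts.size() < 2) return 0.0;  // not enough cycles to measure a period
    const double span_us = static_cast<double>(burst_starts.back() - burst_starts.front());
    if (span_us <= 0.0) return 0.0;  // degenerate input (e.g. negative min_gap_us)
    const double mean_period_us = span_us / static_cast<double>(burst_starts.size() - 1);
    return 1e6 / mean_period_us;  // microseconds -> Hz
}
```

With bursts starting every 10,000 µs the function returns 100 Hz. `min_gap_us` has to be longer than the spread of events within a burst and shorter than the vibration period, which is one reason a real implementation needs more care than this sketch.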

SPATTER MONITORING

Track small particles with spatter-like motion. Thanks to the high time resolution and dynamic range of our Event-Based Vision sensor, small particles can be tracked in the most difficult and demanding environments.

Up to 200kHz tracking frequency (5µs time resolution)

Simultaneous XYT tracking of all particles

HIGH-SPEED COUNTING

Count objects at unprecedented speed and with high accuracy, while generating less data and without any motion blur. Objects are counted as they pass through the field of view, each pixel triggering independently as the object goes by.

>1,000 obj/s throughput

>99.5% accuracy at 1,000 obj/s
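A heavily simplified view of line-based counting: treat one sensor row as a virtual counting line and start a new count whenever that row becomes active again after a quiet period. The sketch assumes time-sorted events, objects that cross the line one at a time, and a hypothetical `max_gap_us` tuning parameter; it illustrates the principle, not the SDK's counting algorithm.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical stand-in for a CD event: pixel coordinates and timestamp in microseconds.
struct LineEvent {
    int x;
    int y;
    std::int64_t t;
};

// Counts objects crossing the row y == line_y. Events must be sorted by timestamp.
// A quiet period longer than max_gap_us on the line ends the current object.
int count_line_crossings(const std::vector<LineEvent> &events, int line_y, std::int64_t max_gap_us) {
    int count = 0;
    bool has_last = false;
    std::int64_t last_t = 0;
    for (const LineEvent &ev : events) {
        if (ev.y != line_y) continue;  // only events on the counting line matter
        if (!has_last || ev.t - last_t > max_gap_us) {
            ++count;  // first event after a quiet period: a new object reaches the line
        }
        last_t = ev.t;
        has_last = true;
    }
    return count;
}
```

Because only one row is inspected, the work per object is tiny, which hints at why event-based counting can keep up with high throughput. A production counter would also need to separate objects that cross the line at the same time.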

EDGELET TRACKING

Track 3D edges and/or fiducial markers for your AR/VR application. Benefit from the high temporal resolution of events to increase the accuracy and robustness of your edge tracking application.

Automated 3D object detection with geometrical prior

3D object real-time tracking

ACTIVE MARKER

Track active markers at ultra-high speed in real-time. With a modulated light scheme and a joint hardware-software design, our Event-Based Vision sensor tracks markers and captures motion in a highly efficient and robust manner.

>1000 Hz pose estimation

Power efficient

STEREO MATCHING

Estimate semi-dense depth maps by matching natural features in synchronized stereo event streams.

Direct and pyramidal stereo block matching schemes

ULTRA SLOW MOTION

Slow down time to a time-resolution equivalent of over 200,000 frames per second, live, while generating orders of magnitude less data than traditional approaches. Understand the finest motion dynamics hidden in ultra-fast, fleeting events.

Up to 200,000 fps (time resolution equivalent)
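The "time-resolution equivalent" frame rate is the inverse of the temporal resolution $\Delta t$. The 200,000 fps figure therefore corresponds to a resolution of 5 µs, the same value quoted for spatter monitoring:

$$
f_{\text{eq}} = \frac{1}{\Delta t}
\quad\Longleftrightarrow\quad
\Delta t = \frac{1}{200{,}000\ \text{s}^{-1}} = 5\ \mu\text{s}
$$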

INTRINSIC CALIBRATION

Deploy your applications in real-life environments and control all the optical parameters of your event-based systems. Calibrate your cameras and adjust the focus with a suite of pre-built tools. Extend to your specific needs and connect to standard calibration routines using our modular calibration toolbox.

Lens focus assessment

5 pattern detection options

Modular camera calibration toolbox

EXTRINSIC CALIBRATION

Combine multiple cameras to enable 3D geometrical reasoning in your application. Calibrate the relative 6DOF pose of your cameras using many pattern detection options. Build upon our modular calibration library to integrate it into a larger framework or extend it to custom use cases.

5 pattern detection options

Modular camera calibration toolbox
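In the usual formulation (this notation is an assumption, not the toolbox's API), suppose calibration gives each camera's pose relative to a shared pattern frame $w$ as a rigid transform $T_{c_i w} \in SE(3)$ that maps pattern coordinates into camera $i$ coordinates. The relative 6DOF pose between two cameras then follows by composition, where $R$ is a 3×3 rotation and $\mathbf{t}$ a translation (3 + 3 degrees of freedom):

$$
T_{c_2 c_1} = T_{c_2 w}\, T_{c_1 w}^{-1},
\qquad
T = \begin{bmatrix} R & \mathbf{t} \\ \mathbf{0}^\top & 1 \end{bmatrix},
\qquad
T^{-1} = \begin{bmatrix} R^\top & -R^\top \mathbf{t} \\ \mathbf{0}^\top & 1 \end{bmatrix}
$$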

OPTICAL FLOW

Rediscover this fundamental computer vision building block, with an event twist. Understand motion much more efficiently through continuous pixel-by-pixel tracking instead of sequential frame-by-frame analysis.

17x less power compared to traditional image-based approaches

Get features only on moving objects

XYT VISUALIZATION

Discover the power of time-space continuity for your application by visualizing your data with our XYT viewer.

See between the frames

Zoom in time and understand motion in the scene

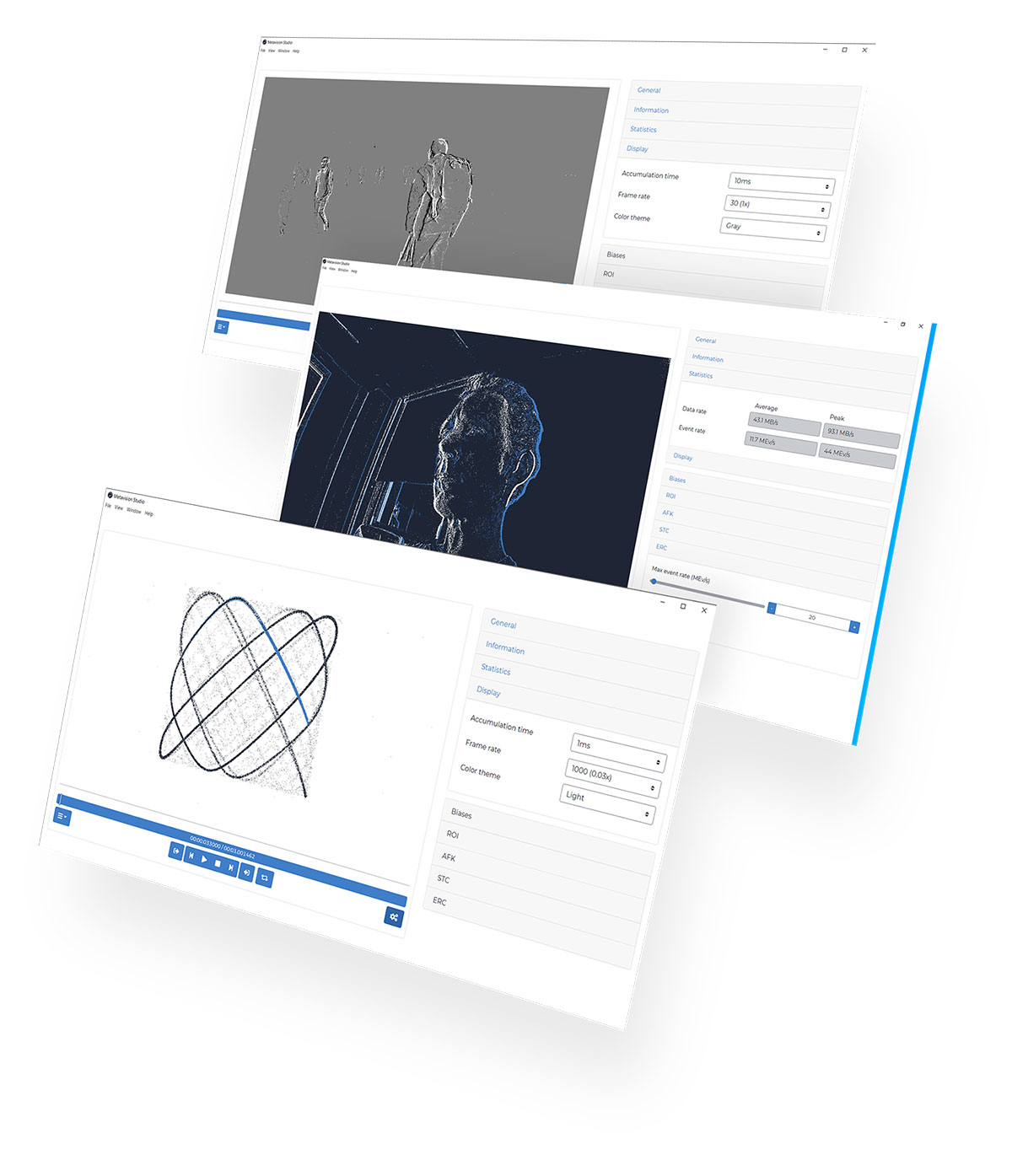

TOOLS

Metavision Studio is the perfect tool to start with, whether you own an EVK or not.

It features a Graphical User Interface allowing anyone to visualize and record data streamed by PROPHESEE-compatible Event-Based Vision systems.

It also enables you to read provided event datasets to deepen your understanding of Event-Based Vision.

Read, stream, tune events

Comprehensive Graphical User Interface

Main features

- Data visualization from Prophesee-compatible Event-Based Vision systems

- Control of data visualization (accumulation time, fps)

- Data recording

- Replay recorded data

- Export to AVI video

- Control, saving and loading of sensor settings
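The accumulation time and fps controls describe how a continuous event stream becomes displayable frames: every 1/fps seconds a frame is rendered from the events received during the last accumulation-time window (at 50 fps, that is every 20 ms). The sketch below is a simplified, hypothetical illustration of that idea with a stand-in `CDEvent` struct. It is not how Metavision Studio or the SDK's frame generators are implemented.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for a CD event: pixel coordinates, polarity (0 = OFF, 1 = ON), timestamp in microseconds.
struct CDEvent {
    int x;
    int y;
    int p;
    std::int64_t t;
};

// Renders one frame at time frame_t_us from the events with t in (frame_t_us - acc_us, frame_t_us].
// Pixel values: 0 = no event, 1 = last event was OFF, 2 = last event was ON. Events must be sorted by timestamp.
std::vector<std::uint8_t> accumulate_frame(const std::vector<CDEvent> &events, int width, int height,
                                           std::int64_t frame_t_us, std::int64_t acc_us) {
    std::vector<std::uint8_t> frame(static_cast<std::size_t>(width) * height, 0);
    for (const CDEvent &ev : events) {
        if (ev.t <= frame_t_us - acc_us || ev.t > frame_t_us) continue;  // outside the accumulation window
        if (ev.x < 0 || ev.x >= width || ev.y < 0 || ev.y >= height) continue;
        // later events overwrite earlier ones at the same pixel
        frame[static_cast<std::size_t>(ev.y) * width + ev.x] = static_cast<std::uint8_t>(ev.p > 0 ? 2 : 1);
    }
    return frame;
}

// Period between two rendered frames in microseconds, rounded to the nearest microsecond.
std::int64_t frame_period_us(double fps) {
    return static_cast<std::int64_t>(1e6 / fps + 0.5);
}
```

If the accumulation time is longer than the frame period, consecutive frames share events and motion leaves trails; if it is shorter, some events never appear on screen even though they are still recorded.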

Metavision SDK contains the largest set of Event-Based Vision algorithms accessible to date. High-performance algorithms are available via APIs, ready to go to production with Event-Based Vision applications.

The algorithms are available as C++ APIs, for highly efficient applications and runtime execution, and as Python APIs that give access to the C++ algorithms, Machine Learning pipelines and more.

Develop high-performance Event-Based Vision solutions

32 C++ code samples, 112 Python classes, 63 algorithms, 47 Python samples, 24 tutorials and 17 ready-to-use apps & tools

Main features

- Extensive documentation / code examples / training & learning material available

- Runs natively on Linux and Windows

- Compatible with Prophesee vision systems and « Powered by Prophesee » partner products

- A complete API that allows you to explore the full potential of Event-Based Vision in just a few lines of code

```cpp
/**********************************************************************************************************************
 * Copyright (c) Prophesee S.A.
 *
 * Licensed under the Apache License, Version 2.0 (the "License");
 * you may not use this file except in compliance with the License.
 * You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0
 * Unless required by applicable law or agreed to in writing, software distributed under the License is distributed
 * on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and limitations under the License.
 **********************************************************************************************************************/

// This code sample demonstrates how to use the Metavision C++ SDK. The goal of this sample is to create a simple event
// counter and displayer by introducing some basic concepts of the Metavision SDK.

#include <cstdint>
#include <iostream>

#include <metavision/sdk/driver/camera.h>
#include <metavision/sdk/base/events/event_cd.h>
#include <metavision/sdk/core/algorithms/periodic_frame_generation_algorithm.h>
#include <metavision/sdk/ui/utils/window.h>
#include <metavision/sdk/ui/utils/event_loop.h>

// this class will be used to analyze the events
class EventAnalyzer {
public:
    // class variables to store global information
    std::int64_t global_counter = 0;        // this will track how many events we processed (64-bit to avoid overflow)
    Metavision::timestamp global_max_t = 0; // this will track the highest timestamp we processed

    // this function will be associated to the camera callback
    // it is used to compute statistics on the received events
    void analyze_events(const Metavision::EventCD *begin, const Metavision::EventCD *end) {
        if (begin == end) {
            return; // empty buffer: nothing to analyze, and (end - 1) would be invalid
        }
        std::cout << "----- New callback! -----" << std::endl;

        // time analysis
        // Note: events are ordered by timestamp in the callback, so the first event will have the lowest timestamp and
        // the last event will have the highest timestamp
        Metavision::timestamp min_t = begin->t;     // get the timestamp of the first event of this callback
        Metavision::timestamp max_t = (end - 1)->t; // get the timestamp of the last event of this callback
        global_max_t = max_t; // events are ordered by timestamp, so the current last event has the highest timestamp

        // counting analysis
        std::int64_t counter = 0;
        for (const Metavision::EventCD *ev = begin; ev != end; ++ev) {
            ++counter; // increasing local counter
        }
        global_counter += counter; // increase global counter

        // report
        std::cout << "There were " << counter << " events in this callback" << std::endl;
        std::cout << "There were " << global_counter << " total events up to now." << std::endl;
        std::cout << "The current callback included events from " << min_t << " up to " << max_t << " microseconds."
                  << std::endl;
        std::cout << "----- End of the callback! -----" << std::endl;
    }
};

// main loop
int main(int argc, char *argv[]) {
    Metavision::Camera cam;       // create the camera
    EventAnalyzer event_analyzer; // create the event analyzer

    if (argc >= 2) {
        // if we passed a file path, open it
        cam = Metavision::Camera::from_file(argv[1]);
    } else {
        // open the first available camera
        cam = Metavision::Camera::from_first_available();
    }

    // to analyze the events, we add a callback that will be called periodically to give access to the latest events
    cam.cd().add_callback([&event_analyzer](const Metavision::EventCD *ev_begin, const Metavision::EventCD *ev_end) {
        event_analyzer.analyze_events(ev_begin, ev_end);
    });

    // to visualize the events, we will need to build frames and render them.
    // building frames will be done with a frame generator that will accumulate the events over time.
    // we need to provide it the camera resolution that we can retrieve from the camera instance
    int camera_width  = cam.geometry().width();
    int camera_height = cam.geometry().height();

    // we also need to choose an accumulation time and a frame rate (here 20 ms and 50 fps)
    const std::uint32_t acc = 20000;
    double fps              = 50;

    // now we can create our frame generator using the previous variables
    auto frame_gen = Metavision::PeriodicFrameGenerationAlgorithm(camera_width, camera_height, acc, fps);

    // we add the callback that will pass the events to the frame generator
    cam.cd().add_callback([&](const Metavision::EventCD *begin, const Metavision::EventCD *end) {
        frame_gen.process_events(begin, end);
    });

    // to render the frames, we create a window using the Window class of the UI module
    Metavision::Window window("Metavision SDK Get Started", camera_width, camera_height,
                              Metavision::BaseWindow::RenderMode::BGR);

    // we set a callback on the window to close it when the Escape or Q key is pressed
    window.set_keyboard_callback(
        [&window](Metavision::UIKeyEvent key, int scancode, Metavision::UIAction action, int mods) {
            if (action == Metavision::UIAction::RELEASE &&
                (key == Metavision::UIKeyEvent::KEY_ESCAPE || key == Metavision::UIKeyEvent::KEY_Q)) {
                window.set_close_flag();
            }
        });

    // we set a callback on the frame generator so that it calls the window object to display the generated frames
    frame_gen.set_output_callback([&](Metavision::timestamp, cv::Mat &frame) { window.show(frame); });

    // start the camera
    cam.start();

    // keep running until the camera is off, the recording is finished or the escape key was pressed
    while (cam.is_running() && !window.should_close()) {
        // we poll events (keyboard, mouse etc.) from the system with a 20 ms sleep to avoid using 100% of a CPU core
        // and we push them into the window, where the callback on the escape key will ask the window to close
        static constexpr std::int64_t kSleepPeriodMs = 20;
        Metavision::EventLoop::poll_and_dispatch(kSleepPeriodMs);
    }

    // the recording is finished or the user wants to quit: stop the camera
    cam.stop();

    // print the global statistics
    const double length_in_seconds = event_analyzer.global_max_t / 1000000.0;
    std::cout << "There were " << event_analyzer.global_counter << " events in total." << std::endl;
    std::cout << "The total duration was " << length_in_seconds << " seconds." << std::endl;
    if (length_in_seconds >= 1) { // no need to print this statistic if the video was too short
        std::cout << "There were " << event_analyzer.global_counter / length_in_seconds
                  << " events per second on average." << std::endl;
    }

    return 0;
}
```

HARDWARE

BUY COMPATIBLE HARDWARE

Prophesee USB Evaluation Kits and the partner camera CenturyArks SilkyEvCam (Powered by Prophesee) are fully compatible with Metavision SDK.

BUILD COMPATIBLE HARDWARE

Through an extensive partnership program, Prophesee enables vision equipment manufacturers to build their own Event-Based Vision products.

Contact us to get access to source code, develop your own hardware or integrate your vision system with Metavision Intelligence.

ACCESS LEVELS

SDK 4.6.2

Freely available binaries of a legacy software release

- Rights to use and redistribute

- Binaries only

- Metavision Studio

- Open + Advanced modules: HAL, Base, Core, CoreML, Stream, UI, Analytics, Calibration, CV, CV3D, ML

- Base tools and samples

- Compatible products: Metavision EVK3, EVK4, CenturyArks SilkyEVCam VGA/HD/HD Lite, IDS uEye XCP-E and XLS-E series, LUCID Triton2 EVS

SDK5 PRO

The latest software development kit, with advanced features

- Rights to use, redistribute, modify and port

- Full source code included

- Metavision Studio

- Open + Advanced modules: HAL, Base, Core, CoreML, Stream, UI, Analytics, Calibration, CV, CV3D, ML

- Advanced tools and samples, adding Machine Learning samples for detection, gesture classification and optical flow, 3D motion capture, stereo matching for 3D and much more

- Extensive knowledge center access, including manuals and advanced application notes

- 2H premium support

- Future updates

- Compatible products: Metavision EVK3, EVK4, CenturyArks SilkyEVCam VGA/HD/HD Lite, IDS uEye XCP-E and XLS-E series

OpenEB

Open-source core modules of the SDK

- Apache 2.0 licensed

- Source code included

- Open modules: HAL, Base, Core, CoreML, Stream, UI

- Compatible products: Metavision EVK3, EVK4, Starter Kit AMD Kria KV260, Starter Kit GenX320 for Raspberry Pi 5, CenturyArks SilkyEVCam VGA/HD/HD Lite, IDS uEye XCP-E and XLS-E series

Looking for an earlier release? Contact us

FAQ

Do I need to buy an EVK to start?

You don’t necessarily need an Evaluation Kit or Event-Based Vision equipment to start your discovery. You can start with Metavision Viewer, included in OpenEB, and interact with the provided recordings first.

Which OS are supported?

The Metavision Intelligence suite supports Linux Ubuntu 22.04 / 24.04 and Windows 10 64-bit on x86-64 PCs. For other operating systems, the provided source code allows you to build your own integrations.

Do I get access to the full source code?

Yes, it comes included with the SDK5 PRO software package.

Source code for the fundamental software bricks is available free of charge as open source through our OpenEB project.

What can I do with the provided license?

The suite is provided under commercial license, enabling you to use, build and even sell your own commercial application at no additional cost. Read the license agreement here.

Which Event-Based Vision hardware is supported?

The software suite is compatible with Prophesee Metavision sensors and USB Cameras. It can also operate with compatible third party products.

Do you provide Python examples?

Yes, we provide python sample code: https://docs.prophesee.ai/stable/samples.html