PROPHESEE stands at the forefront of neuromorphic vision sensing with leading event-based Metavision® sensors

| Sensor | Metavision® GenX320 | Metavision® IMX647 | Metavision® IMX637 | Metavision® IMX646 | Metavision® IMX636 |
| --- | --- | --- | --- | --- | --- |
| Category | Embedded – Low Power | VGA – | VGA – Ultra High Speed | HD – | HD – Ultra High Speed |
| Resolution | 320×320 px | 640×512 px | 640×512 px | 1280×720 px | 1280×720 px |
| Pixel latency @ 1,000 lux | <150 μs | <800 μs | <100 μs | <800 μs | <100 μs |
| Typical power* (Dynamic with ESP) | 16 mW (MIPI), 5 mW (CPI) | 80 mW (MIPI) | 80 mW (MIPI) | 80 mW (MIPI) | 80 mW (MIPI) |
| Low power modes | 36 µW (Ultra-low power mode) | 2.2 mW (Standby) | 2.2 mW (Standby) | 2.2 mW (Standby) | 2.2 mW (Standby) |
| Dynamic range | >140 dB (10 mlux – 100 klux) | >110*** dB (30 mlux – 100 klux) | >86** dB (5 lux – 100 klux); >120*** dB (80 mlux – 100 klux) | >110*** dB (30 mlux – 100 klux) | >86** dB (5 lux – 100 klux); >120*** dB (80 mlux – 100 klux) |
| Optical format | 1/5” | 1/4.5” | 1/4.5” | 1/2.5” | 1/2.5” |
| Output format | MIPI, CPI | MIPI, SLVS | MIPI, SLVS | MIPI, SLVS | MIPI, SLVS |
| Size | 4.1 x 3.3 mm (Die); 7 x 7 mm (CLCC/LGA package) | 13 x 13 mm (LGA package) | 13 x 13 mm (LGA package) | 13 x 13 mm (LGA package) | 13 x 13 mm (LGA package) |
| Variants Available | Bare Die, Packaged, Optical Flex module | Packaged only | Packaged only | Packaged only | Packaged only |
| Compatible Products | | | | | |
| Applications | | | | | |

*For 1M events/sec

**5 lux is the minimum light level guaranteeing inclusion of all possible operating points.

***Low-light cut-off is the minimum light level guaranteeing nominal contrast sensitivity. For many typical applications, the sensor data are actionable down to this light level. 100,000 lux is a virtual high-light limit, not one observed in experiments.

PIXEL INTELLIGENCE

Bringing intelligence to the very edge

At the heart of Prophesee's patented event-based Metavision sensors, each pixel is inspired by the human retina and embeds its own processing logic, which lets it activate independently of its neighbors and trigger events.
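The per-pixel behavior described above can be sketched with the contrast-threshold model commonly used to describe event-based pixels. This is a minimal illustration, not Prophesee's pixel circuit: the function name `pixel_events`, the threshold value, and the sampled intensities are all assumptions made for the example.

```python
import math


def pixel_events(samples, threshold=0.25):
    """Return a list of (timestamp, polarity) events for a single pixel.

    samples: iterable of (timestamp, intensity) pairs, intensity > 0.
    threshold: hypothetical contrast threshold on log intensity.
    """
    events = []
    ref = None  # log intensity at the last emitted event
    for t, intensity in samples:
        level = math.log(intensity)
        if ref is None:
            ref = level  # first sample only sets the reference
            continue
        # Emit one event per threshold crossing, then move the reference.
        while abs(level - ref) >= threshold:
            polarity = 1 if level > ref else -1
            events.append((t, polarity))
            ref += polarity * threshold
    return events


# A static pixel stays silent; a changing pixel reports only the change.
print(pixel_events([(0, 100.0), (1, 100.0), (2, 100.0)]))
print(pixel_events([(0, 100.0), (1, 100.0), (2, 200.0), (3, 200.0), (4, 120.0)]))
```

In this sketch the pixel with constant illumination produces no output at all, while the second pixel reports brightening at `t=2` and dimming at `t=4` and nothing in between.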

SPEED

>10k fps Time-Resolution Equivalent

There is no framerate tradeoff anymore. Take full advantage of events over frames and reveal the invisible hidden in hyper-fast and fleeting scene dynamics.

DYNAMIC RANGE

>120dB Dynamic Range

Achieve high robustness even in extreme lighting conditions. With event-based Metavision sensors, you can see details from pitch dark to blinding brightness in the same scene, at any speed.
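As a quick check on how a dynamic range in dB relates to an illuminance range, the sketch below assumes the 20·log10 ratio convention commonly used for image sensors (the page does not state which convention applies). The function name `dynamic_range_db` is hypothetical; the lux values are the ranges quoted in the sensor table.

```python
import math


def dynamic_range_db(min_lux, max_lux):
    """Dynamic range in dB, assuming the 20*log10(max/min) convention."""
    return 20.0 * math.log10(max_lux / min_lux)


# Illuminance ranges quoted in the sensor table (lux).
ranges = {
    "10 mlux - 100 klux": (0.01, 100_000),
    "80 mlux - 100 klux": (0.08, 100_000),
    "5 lux - 100 klux": (5.0, 100_000),
}

for label, (lo, hi) in ranges.items():
    print(f"{label}: {dynamic_range_db(lo, hi):.1f} dB")
```

Under that assumption, the 80 mlux to 100 klux range works out to roughly 121.9 dB, which is consistent with the >120 dB figure.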

LOW LIGHT

0.08 lx Low-Light Cutoff

Sometimes the darkest areas hold the clearest insights. Metavision enables you to see events where there is almost no light, down to 0.08 lx.

DATA EFFICIENCY

10 to 1000x less data

With each pixel only reporting when it senses movement, event-based Metavision sensors generate on average 10 to 1000x less data than traditional image-based ones.
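To get a feel for where such a ratio comes from, the sketch below compares the raw data rate of a frame sensor with that of an event stream. Every number in it is an assumption chosen for illustration: an 8-bit 1280×720 frame sensor running at 1,000 fps, an event stream at 1M events/sec (the reference rate used in the power footnote), and a hypothetical 4 bytes per event. Real ratios depend on scene activity and on the event encoding.

```python
def frame_bytes_per_second(width, height, fps, bytes_per_pixel=1):
    """Raw data rate of a frame sensor that reads out every pixel each frame."""
    return width * height * bytes_per_pixel * fps


def event_bytes_per_second(events_per_second, bytes_per_event=4):
    """Data rate of an event stream; bytes_per_event is a hypothetical size."""
    return events_per_second * bytes_per_event


frame_rate = frame_bytes_per_second(1280, 720, fps=1000)
event_rate = event_bytes_per_second(1_000_000)

print(f"frame-based: {frame_rate / 1e6:.1f} MB/s")
print(f"event-based: {event_rate / 1e6:.1f} MB/s")
print(f"ratio: {frame_rate / event_rate:.1f}x")
```

With these assumed inputs the frame sensor produces 921.6 MB/s against 4.0 MB/s for the event stream, a ratio of about 230x. A quieter scene or a lower frame rate moves the ratio in either direction.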

REVEAL THE INVISIBLE BETWEEN THE FRAMES

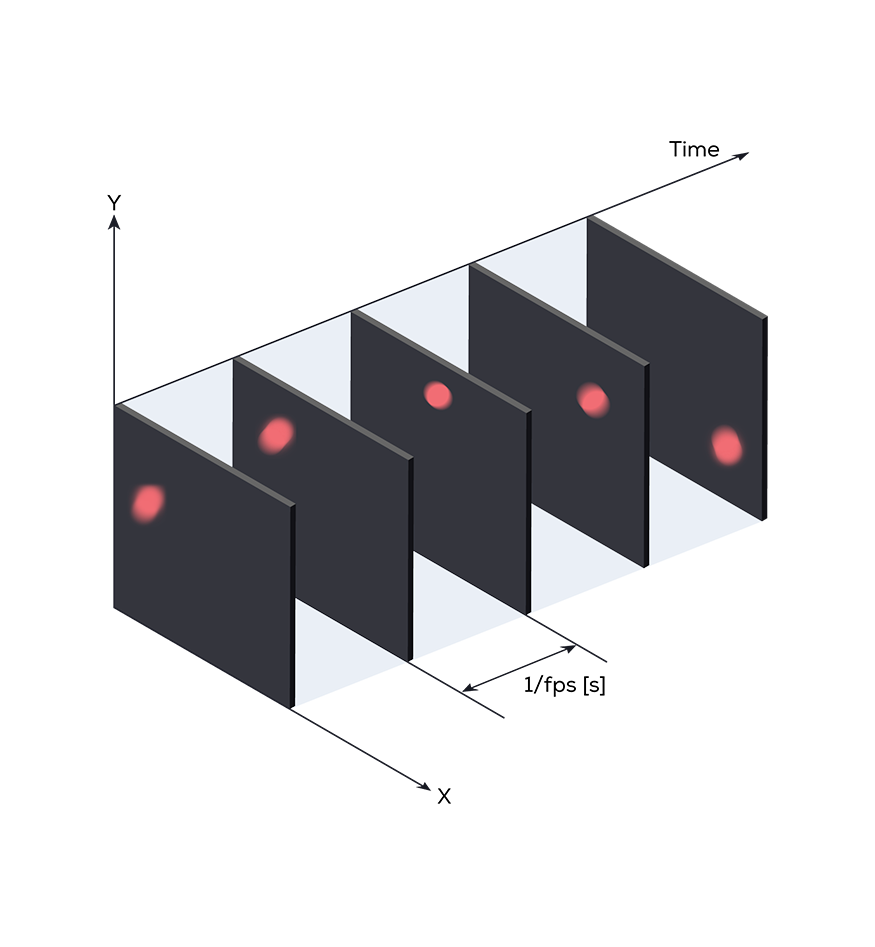

FRAME-BASED VISION

In a traditional frame-based sensor, the whole sensor array is triggered at a pre-defined rhythm, regardless of the actual scene’s dynamics.

This leads to the acquisition of large volumes of raw data that are either undersampled or redundant.

On the LEFT, a simulation of Frame-Based Vision running at 10 fps. This approach leverages traditional cinema techniques and records a succession of static images to represent movement. Between these images, there is nothing: the system is blind by design.

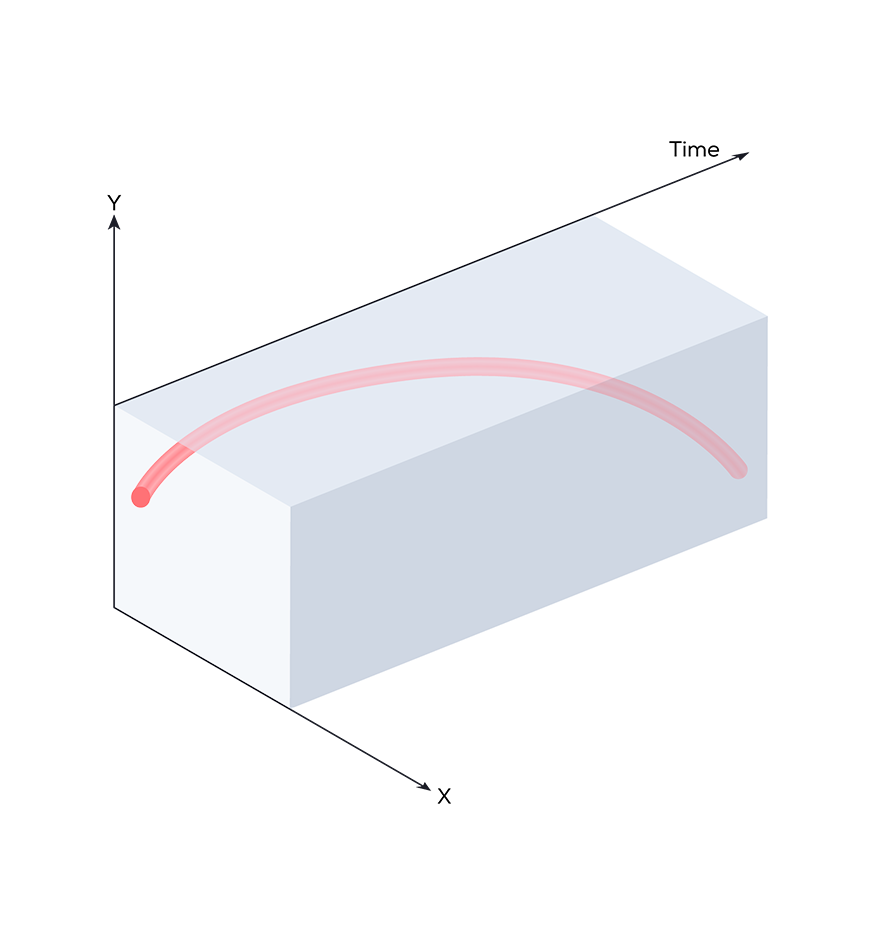

On the RIGHT, the same scene recorded using Event-Based Vision. There is no gap between the frames, because there are no frames anymore. Instead, there is a continuous stream of essential information, driven dynamically by movement, pixel by pixel.

This drastically reduces the power, latency and data processing requirements imposed by traditional frame-based systems.

ADVANCED TOOLKIT

Every Prophesee USB evaluation kit purchase includes complimentary access to an advanced toolkit comprising an online portal, drivers, a data player and SDK PRO.

We are sharing an advanced toolkit so you can start building your own vision.