TRUSTED BY THOUSANDS OF INDUSTRY PROFESSIONALS

Prophesee is the inventor of the world’s most advanced neuromorphic vision systems.

Composed of a patented Event-based Vision sensor featuring intelligent, independent pixels and an extensive AI library, the Prophesee Metavision® system unlocks next-level performance in parts and particle counting and sizing.

Drastically improve your process monitoring by counting and tracking parts, estimating their trajectories, and measuring their size, shape and distribution in real time.

- >1,000 objects/sec sizing + counting throughput
- >10 m/s linear speed
- 99.5% accuracy @1,000 obj/sec
- Real-time processing
- Ultra-low data rate
- Advanced LED: no need for complex, expensive lighting

The side-by-side comparison above illustrates key differences between the traditional image-based and event-based approaches. In this scenario, millimeter-scale pellets of varying sizes are projected through the field of view of both cameras using 10-bar compressed air.

Parts are both counted and sized at a rate of over 1,000 parts per second and a linear speed of over 10 m/s, with accuracy greater than 99.5% at full speed.

REAL-TIME GAUGING AND PARTICLE SIZE DISTRIBUTION MONITORING

Typical use cases: Food & beverage industry, milled products monitoring, plastic molding pellet distribution…

Access the highest-speed, highest-precision particle size distribution monitoring for your industrial processes, whether you handle grain, flour, powders or particles of any size.

Get a real-time assessment of the size distribution to guarantee the perfect “recipe” mix and composition.

1,000 particles per second throughput

99% counting accuracy

Real-time closed-loop process control

SURFACE CONTAMINATION AND DEFECT DETECTION

Typical use cases: Quality control for continuous processes (web inspection, glass manufacturing, plastic film production…)

High-speed inspection of continuous film, flat glass and sheet metal.

Detect holes, spots, scratches, contaminants and other potential defects in real time at speeds of up to 60 m/s.

60 m/s defect detection speed

No more complex lighting needed

Easy setup

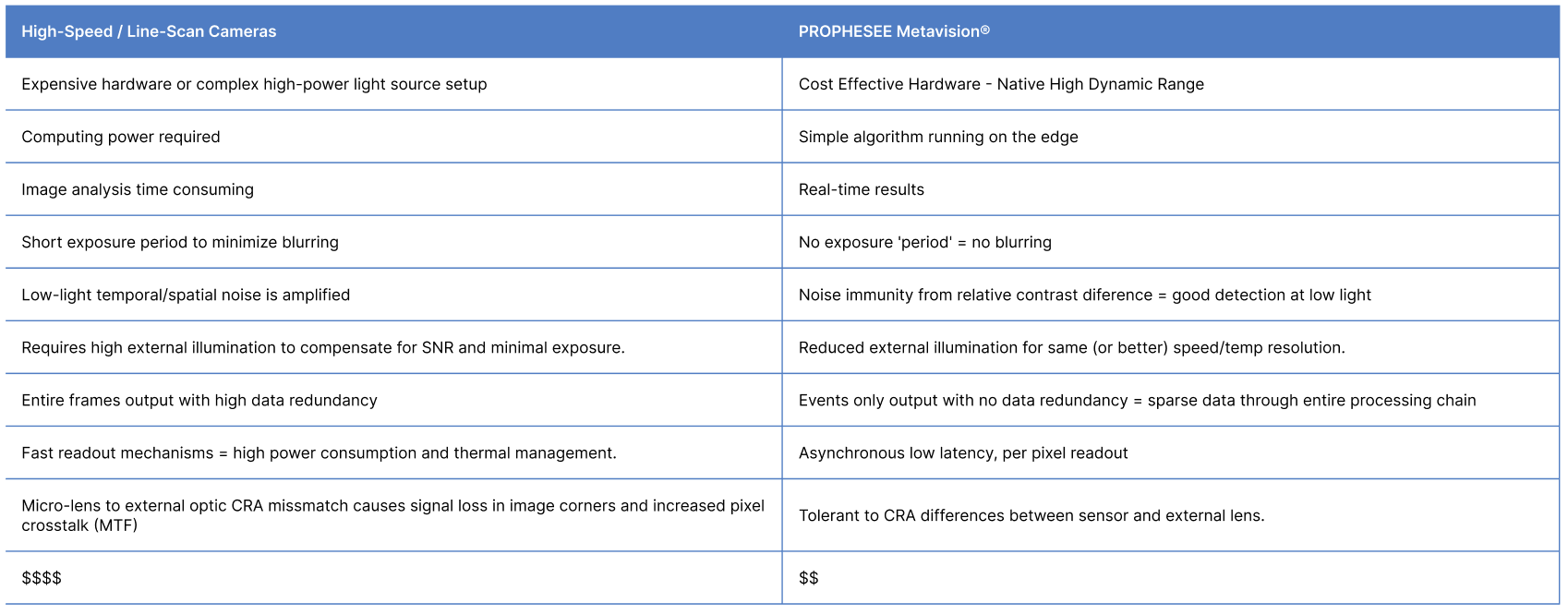

Unlike traditional high-speed or line-scan cameras, Metavision® sensors use breakthrough intelligent pixels to capture only motion, at the pixel level and with microsecond time resolution.

This pixel-level revolution represents a departure from the original concept of image-based capture and enables, for the first time:

- Shutter-free: neither global nor rolling shutter; asynchronous pixel activation
- Blur-free: no exposure time
- High speed: >10k fps equivalent time resolution
- Resolution: 1280x720 px
- Dynamic range: >120 dB
- Low-light cutoff: 0.08 lux
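As a rough worked example (plain arithmetic, not a published specification), the >10k fps equivalence and microsecond timestamps can be related to the >10 m/s linear speed quoted earlier on this page. Here v is the object speed in the scene, T_frame the frame period at 10k fps and Δt a 1 µs timestamp step; these symbols are introduced only for illustration.

```latex
\begin{aligned}
T_{\text{frame}} &= \frac{1}{10\,000\ \text{s}^{-1}} = 100\ \mu\text{s} \\
d_{\text{frame}} &= v\,T_{\text{frame}} = 10\ \text{m/s} \times 100\ \mu\text{s} = 1\ \text{mm} \\
d_{\text{tick}}  &= v\,\Delta t = 10\ \text{m/s} \times 1\ \mu\text{s} = 10\ \mu\text{m}
\end{aligned}
```

This compares time sampling only: it shows how far an object travels between samples, and says nothing about spatial measurement accuracy.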

Q&A

What do I need to get started?

You will need the Evaluation Kit 4 HD to run your own live tests. You can also begin exploring with our free Metavision SDK and experiment with the provided recordings first.

What data exactly do I get from the sensor?

The sensor outputs a continuous stream of events, each consisting of:

- X and Y coordinates, indicating the location of the activated pixel in the sensor array

- The polarity, indicating whether the event corresponds to a positive (dark to light) or negative (light to dark) contrast change

- A timestamp “t”, precisely encoding when the event was generated, at microsecond resolution

For more information, see our pages on event-based concepts and on event streaming and decoding.
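A minimal sketch of how such an event could be represented in Python. The `Event` class, its field names, the 0/1 polarity encoding and the `polarity_counts` helper are hypothetical choices for illustration, not the Metavision SDK's actual event type; only the four fields and the 1280x720 array size come from this page.

```python
from dataclasses import dataclass
from typing import Iterable

WIDTH, HEIGHT = 1280, 720  # sensor resolution quoted on this page


@dataclass(frozen=True)
class Event:
    """One event from the stream (hypothetical type, for illustration only)."""
    x: int  # column of the activated pixel, 0 <= x < WIDTH
    y: int  # row of the activated pixel, 0 <= y < HEIGHT
    p: int  # polarity (assumed encoding): 1 = positive (dark to light), 0 = negative
    t: int  # timestamp in integer microseconds

    def __post_init__(self) -> None:
        # Reject coordinates outside the pixel array and unknown polarities.
        if not (0 <= self.x < WIDTH and 0 <= self.y < HEIGHT):
            raise ValueError(f"pixel ({self.x}, {self.y}) is outside the array")
        if self.p not in (0, 1):
            raise ValueError(f"polarity must be 0 or 1, got {self.p}")


def polarity_counts(events: Iterable[Event]) -> tuple[int, int]:
    """Return (positive, negative) event counts in a single pass."""
    positive = negative = 0
    for ev in events:
        if ev.p == 1:
            positive += 1
        else:
            negative += 1
    return positive, negative


stream = [Event(10, 20, 1, 1_000), Event(11, 20, 0, 1_003), Event(640, 360, 1, 1_010)]
print(polarity_counts(stream))     # (2, 1)
print(stream[-1].t - stream[0].t)  # 10 (microseconds spanned by the batch)
```

Keeping timestamps as integer microseconds matches the stated resolution and keeps time arithmetic free of floating-point rounding.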

How can the sensor be “blur-free”?

Image blur is mostly caused by movement of the camera or the subject during exposure. This happens when the shutter speed is too slow or the movement is too fast.

With an event-based sensor, there is no exposure; instead, each pixel independently triggers “events” in a continuous flow whenever it detects a change in illumination. Hence there is no blur.
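The link between exposure and blur can be written as a simple estimate: the smear length b is the distance the subject moves during the exposure time t_exp. The 1 ms exposure below is a hypothetical value chosen for illustration, combined with the 10 m/s linear speed quoted earlier on this page.

```latex
b = v\,t_{\text{exp}} = 10\ \text{m/s} \times 1\ \text{ms} = 10\ \text{mm}
```

Shortening the exposure reduces b proportionally but also collects less light, which is why frame-based high-speed setups often need strong lighting; a pixel with no exposure time avoids this trade-off.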

Can I also get images in addition to events?

You cannot get images directly from our event-based sensor, but you can generate frames from the events for visualization purposes.

To do so, events are accumulated over a period of time (usually the frame period, for example 20 ms for 50 FPS), because the number of events occurring at a precise time T (with microsecond precision) may be very small.

A frame is first initialized with a background color (e.g. white); then, for each event occurring during the frame period, the corresponding pixel is set in the frame.
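The accumulation procedure described above can be sketched in plain Python. This is an illustrative sketch, not Metavision SDK code: `frames_from_events`, the (x, y, p, t) tuple layout and the gray levels chosen for positive and negative events are assumptions, and events are assumed to arrive sorted by timestamp.

```python
from typing import Iterable, Iterator, Optional

# Assumed display convention: white background, black for positive events,
# mid-gray for negative events.
BACKGROUND, POSITIVE, NEGATIVE = 255, 0, 128

Event = tuple[int, int, int, int]  # (x, y, p, t); p = 1/0, t in microseconds
Frame = list[list[int]]            # frame[row][column] gray level


def blank_frame(width: int, height: int) -> Frame:
    """Return a frame initialized with the background color."""
    return [[BACKGROUND] * width for _ in range(height)]


def frames_from_events(events: Iterable[Event], width: int, height: int,
                       fps: int) -> Iterator[Frame]:
    """Accumulate a time-sorted event stream into fixed-period frames.

    Each frame covers 1/fps seconds (20,000 us at 50 FPS). A period with no
    events still yields a blank frame, so the output keeps a constant rate.
    """
    period_us = 1_000_000 // fps
    frame: Optional[Frame] = None
    t_end = 0
    for x, y, p, t in events:
        if frame is None:  # the first event opens the first period
            frame, t_end = blank_frame(width, height), t + period_us
        while t >= t_end:  # event falls in a later period
            yield frame
            frame, t_end = blank_frame(width, height), t_end + period_us
        # Draw the event; a later event at the same pixel overwrites it.
        frame[y][x] = POSITIVE if p else NEGATIVE
    if frame is not None:
        yield frame  # flush the last, partial period


stream = [(0, 0, 1, 0), (1, 0, 0, 5_000), (2, 1, 1, 45_000)]
out = list(frames_from_events(stream, width=3, height=2, fps=50))
print(len(out))  # 3 (the 20-40 ms frame is blank)
print(out[0])    # [[0, 128, 255], [255, 255, 255]]
```

Processing the stream in one pass avoids rescanning all events for every frame. Shorter periods show finer motion but hold fewer events per frame, which is the trade-off the answer above describes.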

What can I do with the provided software license?

The suite is provided under a commercial license that lets you use it to build, and even sell, your own commercial applications at no cost. Read the license agreement here.

What is the frame rate?

There is no frame rate: our Metavision sensor has neither a global nor a rolling shutter; it is actually shutter-free.

This represents a new machine vision category, enabled by a patented sensor design that gives each pixel its own intelligent processing, so that each pixel activates independently when it detects a change.

As soon as an event is generated, it is sent to the system: data flows continuously, pixel by pixel, rather than at a fixed pace.

How can the dynamic range be so high?

The pixels of our event-based sensor contain photoreceptors that detect changes in illumination on a logarithmic scale. The sensor therefore automatically adapts to both low and high light intensities and does not saturate the way a classical frame-based sensor would.
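The effect of the logarithmic response can be illustrated with a common idealized model of an event pixel (an assumption here, not Prophesee's published pixel design): a pixel fires when its log intensity has moved by at least a contrast threshold C since the reference intensity I_ref recorded at its last event, and the polarity p is the sign of that change.

```latex
\begin{aligned}
&\text{event when } \left|\ln I(t) - \ln I_{\text{ref}}\right| \ge C,
  \qquad p = \operatorname{sign}\!\left(\ln I(t) - \ln I_{\text{ref}}\right) \\
&\text{relative change } I \to kI:\quad \ln(kI) - \ln I = \ln k
\end{aligned}
```

The response to a relative change k does not depend on the absolute level I: doubling the light (k = 2, ln 2 ≈ 0.693) produces the same signal in a dim region as in a bright one. For reference, with the 20·log10 convention usually used for image sensors, a dynamic range of 120 dB corresponds to an intensity ratio of 10^6 : 1.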

I have existing image-based datasets. Can I use them to train event-based models?

Yes, you can use our “Video to Event Simulator”, a Python script that converts frame-based images or videos into their event-based counterparts. The resulting event-based files can then be used to train event-based models.
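To illustrate the general idea of frame-to-event conversion, here is a naive Python sketch. It is not the Video to Event Simulator and makes no claim about how that script works: it compares each pixel's log intensity with a per-pixel reference and emits one event per crossing of a contrast threshold. `frames_to_events`, `threshold` and `eps` are hypothetical names and illustrative values.

```python
import math
from typing import Sequence

# (x, y, p, t_us); polarity p = 1 for brighter, 0 for darker (assumed encoding)
Event = tuple[int, int, int, int]
Image = Sequence[Sequence[float]]  # image[row][column] linear intensity


def frames_to_events(frames: Sequence[Image], timestamps_us: Sequence[int],
                     threshold: float = 0.2, eps: float = 1e-3) -> list[Event]:
    """Naive frame-to-event conversion (hypothetical helper, illustrative values).

    Each pixel keeps a reference log intensity; whenever the new log intensity
    differs from it by whole multiples of `threshold`, one event is emitted per
    multiple. `eps` avoids log(0) on black pixels.
    """
    if len(frames) != len(timestamps_us):
        raise ValueError("need exactly one timestamp per frame")
    if not frames:
        return []
    # Per-pixel reference log intensity, initialized from the first frame.
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events: list[Event] = []
    for image, t in zip(frames[1:], timestamps_us[1:]):
        for y, row in enumerate(image):
            for x, value in enumerate(row):
                delta = math.log(value + eps) - ref[y][x]
                n = int(abs(delta) // threshold)  # number of threshold crossings
                if n == 0:
                    continue
                p = 1 if delta > 0 else 0
                events.extend([(x, y, p, t)] * n)  # all stamped at frame time t
                # Advance the reference only by the change actually signalled.
                ref[y][x] += math.copysign(n * threshold, delta)
    return events


# Same 4x brightening at a dim pixel (1 -> 4) and a bright pixel (1000 -> 4000).
ev = frames_to_events([[[1.0, 1000.0]], [[4.0, 4000.0]]], [0, 20_000])
print(len(ev))  # 12 (6 events per pixel)
```

Because every event from a frame pair gets the later frame's timestamp, the output's time resolution is limited by the video frame rate; a more faithful converter would interpolate between frames. The example also reflects the logarithmic behavior: a 4x increase yields the same number of events at intensity 1 and at intensity 1000.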