Welcome to the Prophesee Research Library, where academic innovation meets the world’s most advanced event-based vision technologies.

We have brought together groundbreaking research from scholars who are pushing the boundaries of Prophesee event-based vision technologies, to inspire collaboration and drive new breakthroughs in the academic community.

Introducing the Prophesee Research Library, the largest curated collection of academic papers leveraging Prophesee event-based vision.

Together, let’s reveal the invisible and shape the future of Computer Vision.

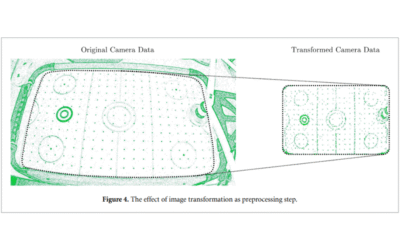

Low-latency neuromorphic air hockey player

This paper focuses on using spiking neural networks (SNNs) to control a robotic manipulator in an air-hockey game. The system processes data from an event-based camera, tracking the puck’s movements and responding to a human player in real time. It demonstrates the potential of SNNs to perform fast, low-power, real-time tasks on massively parallel hardware. The air-hockey platform offers a versatile testbed for evaluating neuromorphic systems and exploring advanced algorithms, including trajectory prediction and adaptive learning, to enhance real-time decision-making and control.
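The abstract does not describe the network itself. As a hedged illustration of the basic unit that spiking neural networks are built from, here is a minimal discrete-time leaky integrate-and-fire (LIF) neuron. The class name, parameter values, and forward-Euler update rule are assumptions chosen for illustration; they are not the paper's model or the API of any neuromorphic hardware.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class LIFNeuron:
    """Discrete-time leaky integrate-and-fire neuron (forward Euler update).

    All default values are illustrative assumptions, not taken from the paper.
    """
    tau_ms: float = 20.0      # membrane time constant
    v_threshold: float = 1.0  # firing threshold
    v_reset: float = 0.0      # membrane potential right after a spike
    v: float = 0.0            # current membrane potential

    def step(self, input_current: float, dt_ms: float = 1.0) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        # The potential leaks toward zero while integrating the input.
        self.v += (dt_ms / self.tau_ms) * (input_current - self.v)
        if self.v >= self.v_threshold:
            self.v = self.v_reset  # fire, then reset
            return True
        return False


def spike_times(currents: Sequence[float], neuron: Optional[LIFNeuron] = None,
                dt_ms: float = 1.0) -> List[int]:
    """Return the indices of the time steps at which the neuron fired."""
    neuron = neuron if neuron is not None else LIFNeuron()
    return [t for t, current in enumerate(currents) if neuron.step(current, dt_ms)]
```

With a constant input of 2.0 and the default parameters, `spike_times([2.0] * 50)` returns `[13, 27, 41]`, while a constant input below the threshold, such as 0.9, never fires. The neuron only communicates when it spikes, which is the property that lets SNNs pair naturally with sparse event-camera output.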

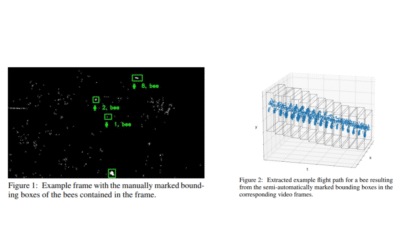

Features for Classifying Insect Trajectories in Event Camera Recordings

In this paper, the focus is on classifying insect trajectories recorded with a stereo event-camera setup. The steps to generate a labeled dataset of trajectory segments are presented, along with methods for propagating labels to unlabeled trajectories. Features are extracted from trajectory point clouds using FoldingNet and PointNet++, and their dimensionality is reduced with t-SNE. PointNet++ features form clusters corresponding to insect groups and achieve 90.7% classification accuracy across five groups. Algorithms for estimating insect speed and size are also developed to serve as additional features.
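The abstract does not say how speed is estimated. Assuming the stereo pipeline already yields time-stamped, triangulated 3D positions for each trajectory, a minimal sketch computes average speed as total path length divided by elapsed time. The function name `mean_speed`, the point layout, and the units are hypothetical; real trajectories would likely need smoothing before this step, since positional noise inflates the path length.

```python
import math
from typing import Sequence, Tuple

# Hypothetical layout: (t in seconds, x, y, z in metres), already triangulated.
TrajectoryPoint = Tuple[float, float, float, float]


def mean_speed(trajectory: Sequence[TrajectoryPoint]) -> float:
    """Average speed along a time-ordered 3D trajectory: path length / duration."""
    if len(trajectory) < 2:
        raise ValueError("need at least two points")
    path_length = 0.0
    for (t0, x0, y0, z0), (t1, x1, y1, z1) in zip(trajectory, trajectory[1:]):
        if t1 <= t0:
            raise ValueError("timestamps must be strictly increasing")
        # Euclidean distance between consecutive 3D positions
        path_length += math.sqrt((x1 - x0) ** 2 + (y1 - y0) ** 2 + (z1 - z0) ** 2)
    duration = trajectory[-1][0] - trajectory[0][0]
    return path_length / duration
```

For example, a trajectory that moves 5 m in the first second and 12 m in the next has a mean speed of 8.5 m/s.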

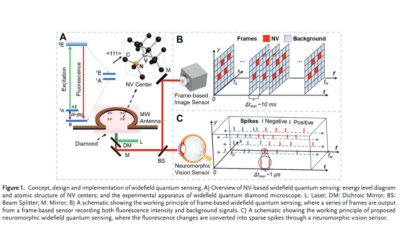

Widefield Diamond Quantum Sensing with Neuromorphic Vision Sensors

In this paper, a neuromorphic vision sensor encodes fluorescence changes in diamonds into spikes for optically detected magnetic resonance (ODMR). This reduces data volume and latency while offering a wide dynamic range, high temporal resolution, and an excellent signal-to-background ratio, improving widefield quantum sensing performance. Experiments with an off-the-shelf event camera demonstrate significant gains in temporal resolution while maintaining precision comparable to specialized frame-based approaches. The technology also successfully monitors dynamically modulated laser heating of gold nanoparticles, providing new insights for high-precision, low-latency widefield quantum sensing.
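To make "encoding fluorescence changes into spikes" concrete, here is a simplified, idealized model of a single event-camera pixel: it emits an ON (+1) or OFF (-1) event each time the log intensity moves by a contrast threshold from its last reference level. In an ODMR sweep, a fluorescence dip deep enough to cross the threshold would produce OFF events and its recovery ON events. The function name and threshold value are assumptions, and the model ignores noise, latency, and refractory effects; it is not the paper's processing pipeline.

```python
import math
from typing import List, Sequence, Tuple


def pixel_events(times: Sequence[float], intensities: Sequence[float],
                 contrast_threshold: float = 0.2) -> List[Tuple[float, int]]:
    """Idealized single-pixel event model (threshold value is an assumption).

    Emits (t, polarity) whenever log intensity has moved by at least
    contrast_threshold from the pixel's last reference level:
    +1 (ON) for increases, -1 (OFF) for decreases.
    """
    if len(times) != len(intensities):
        raise ValueError("times and intensities must have the same length")
    events: List[Tuple[float, int]] = []
    reference = math.log(intensities[0])
    for t, intensity in zip(times[1:], intensities[1:]):
        log_i = math.log(intensity)
        # One event per threshold crossing; a large change yields several events.
        while log_i - reference >= contrast_threshold:
            reference += contrast_threshold
            events.append((t, +1))
        while reference - log_i >= contrast_threshold:
            reference -= contrast_threshold
            events.append((t, -1))
    return events
```

Because only changes are reported, a pixel watching constant fluorescence emits nothing, which is the source of the reduced data volume mentioned above.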

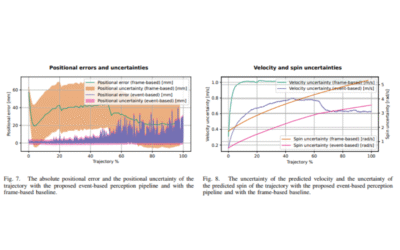

An Event-Based Perception Pipeline for a Table Tennis Robot

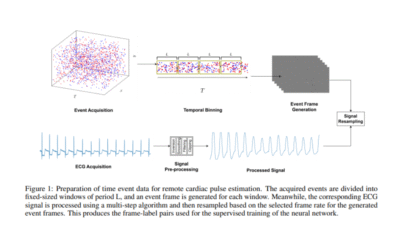

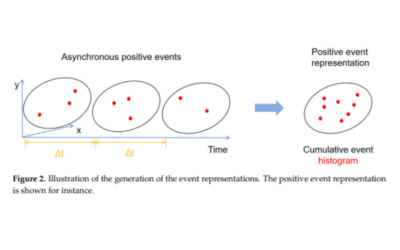

Contactless Cardiac Pulse Monitoring Using Event Cameras

In this paper, contact-free reconstruction of an individual’s cardiac pulse signal from event recordings of the face is investigated using a supervised convolutional neural network (CNN). An end-to-end model is trained to extract the cardiac signal from a two-dimensional representation of the event stream, and its performance is evaluated by the accuracy of the calculated heart rate. Experimental results confirm that physiological cardiac information in the facial region is effectively preserved, and that models trained on event frames at higher frame rates (FPS) outperform those trained on standard camera footage.
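Two steps in this abstract can be made concrete with a short sketch: binning the asynchronous event stream into 2D frames at a chosen frame rate, and computing heart rate from a reconstructed pulse signal. Both functions are hypothetical illustrations, not the paper's code. The CNN that maps frames to a pulse signal is omitted, the event tuple layout is assumed, and the 0.7 to 4.0 Hz search band (42 to 240 beats per minute) is an assumed plausible range.

```python
import cmath
import math
from typing import Iterable, List, Optional, Sequence, Tuple

Event = Tuple[int, int, float, int]  # assumed layout: (x, y, t in seconds, polarity +1/-1)


def events_to_frames(events: Iterable[Event], width: int, height: int,
                     fps: float, duration_s: float) -> List[List[List[int]]]:
    """Bin events into fixed-rate 2D frames of signed event counts."""
    n_frames = max(1, round(duration_s * fps))
    frames = [[[0] * width for _ in range(height)] for _ in range(n_frames)]
    for x, y, t, polarity in events:
        k = int(t * fps)  # index of the time bin this event falls into
        if 0 <= k < n_frames and 0 <= x < width and 0 <= y < height:
            frames[k][y][x] += polarity
    return frames


def heart_rate_bpm(signal: Sequence[float], sample_rate_hz: float,
                   f_min_hz: float = 0.7, f_max_hz: float = 4.0) -> float:
    """Heart rate as the dominant frequency of a pulse signal, in beats/min."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [s - mean for s in signal]  # remove the DC component
    best_freq: Optional[float] = None
    best_power = -1.0
    for k in range(1, n // 2 + 1):
        freq = k * sample_rate_hz / n
        if not f_min_hz <= freq <= f_max_hz:
            continue  # skip frequencies outside the assumed heart-rate band
        # Naive DFT coefficient for bin k
        coeff = sum(c * cmath.exp(-2j * math.pi * k * i / n)
                    for i, c in enumerate(centered))
        power = abs(coeff) ** 2
        if power > best_power:
            best_freq, best_power = freq, power
    if best_freq is None:
        raise ValueError("signal too short to resolve the heart-rate band")
    return 60.0 * best_freq
```

A higher `fps` gives the model finer temporal sampling of each heartbeat, which is one plausible reading of why higher-FPS event frames help. For a 10-second, 30 Hz test signal `math.sin(2 * math.pi * 1.2 * t)`, `heart_rate_bpm` returns about 72 beats per minute. The frequency resolution is `sample_rate_hz / n`, so longer windows resolve heart rate more finely; the naive DFT is O(n²), and an FFT would be the usual choice in practice.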

Dynamic Vision-Based Non-Contact Rotating Machine Fault Diagnosis with EViT

In this paper, a dynamic vision-based non-contact machine fault diagnosis method is proposed using the Eagle Vision Transformer (EViT). The architecture incorporates Bi-Fovea Self-Attention and Bi-Fovea Feedforward Network mechanisms to process asynchronous event streams while preserving temporal precision. EViT achieves exceptional fault diagnosis performance across diverse operational conditions through multi-scale spatiotemporal feature analysis, adaptive learning, and transparent decision pathways. Validated on rotating machine monitoring data, this approach bridges bio-inspired vision processing with industrial requirements, providing new insights for predictive maintenance in smart manufacturing environments.

INVENTORS AROUND THE WORLD

Feb 2025