EXPLORATION STARTS NOW

Metavision® EVK3D is your perfect entry point to Structured Light Event-Based Depth Sensing, by the inventors of the world’s most advanced neuromorphic vision systems.

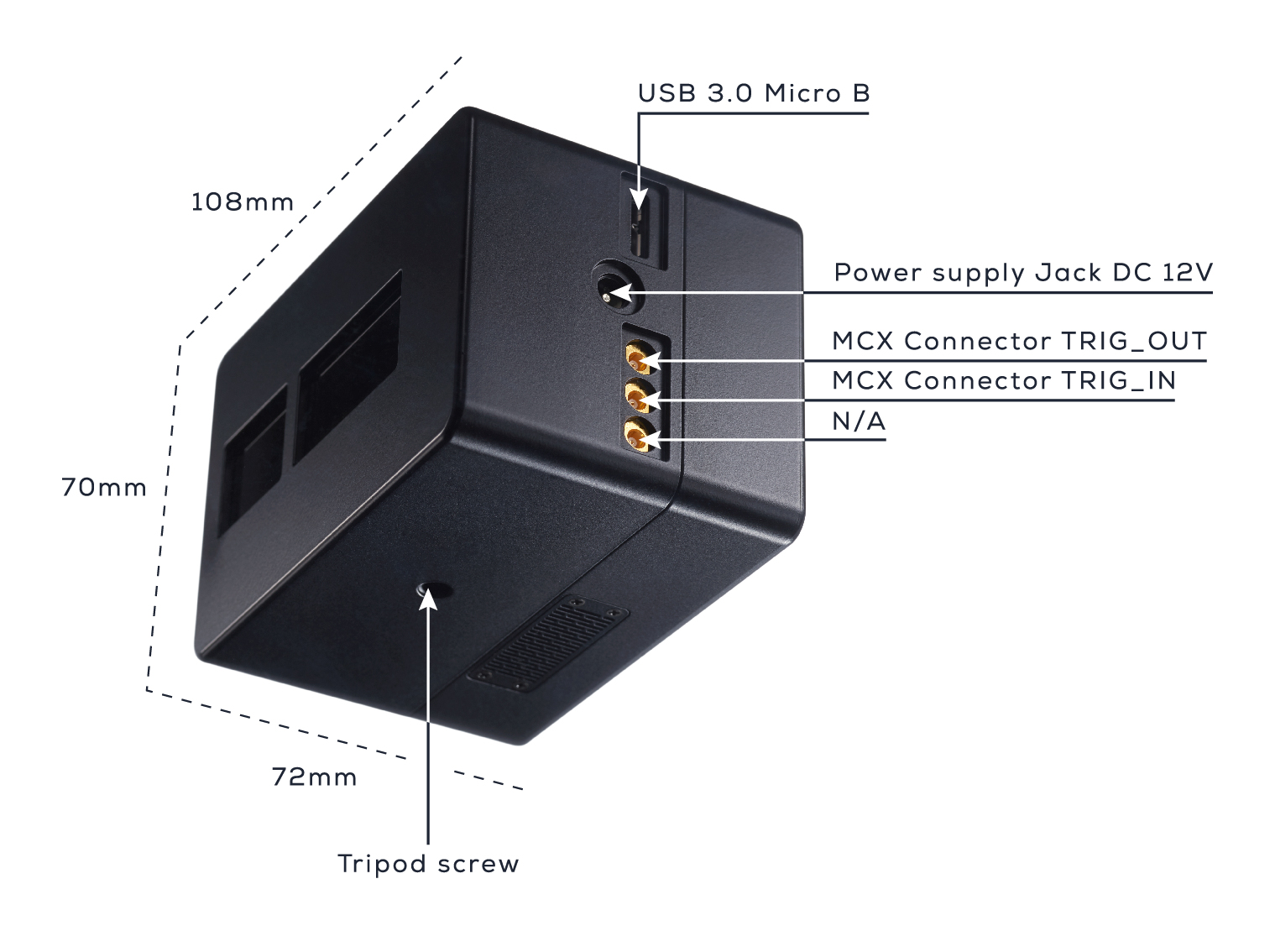

This evaluation kit features the revolutionary IMX636HD Event-Based Vision sensor, co-developed by Sony and Prophesee, together with an addressable VCSEL array projector. It is powered by a Xilinx Zynq UltraScale+ 6EG processing core.

With its USB 3.0 Micro-B and MCX connectors and its tripod screw mount, it can interface with existing systems to deliver fast 3D point clouds.

Welcome to our global Inventors Community. We can’t wait to see what frontiers you will push.

1

Create your support account

Register your premium support package by creating your Knowledge Center account. Download the software, access product documentation, submit support requests, and access the community forum, application notes and other exclusive content.

3

Get calibration data for your EVK3D

Download the calibration data computed at the factory, specific to your device.

4

Connect the EVK3D to your computer

Connect the provided power supply. Connect the USB cable (A side first).

5

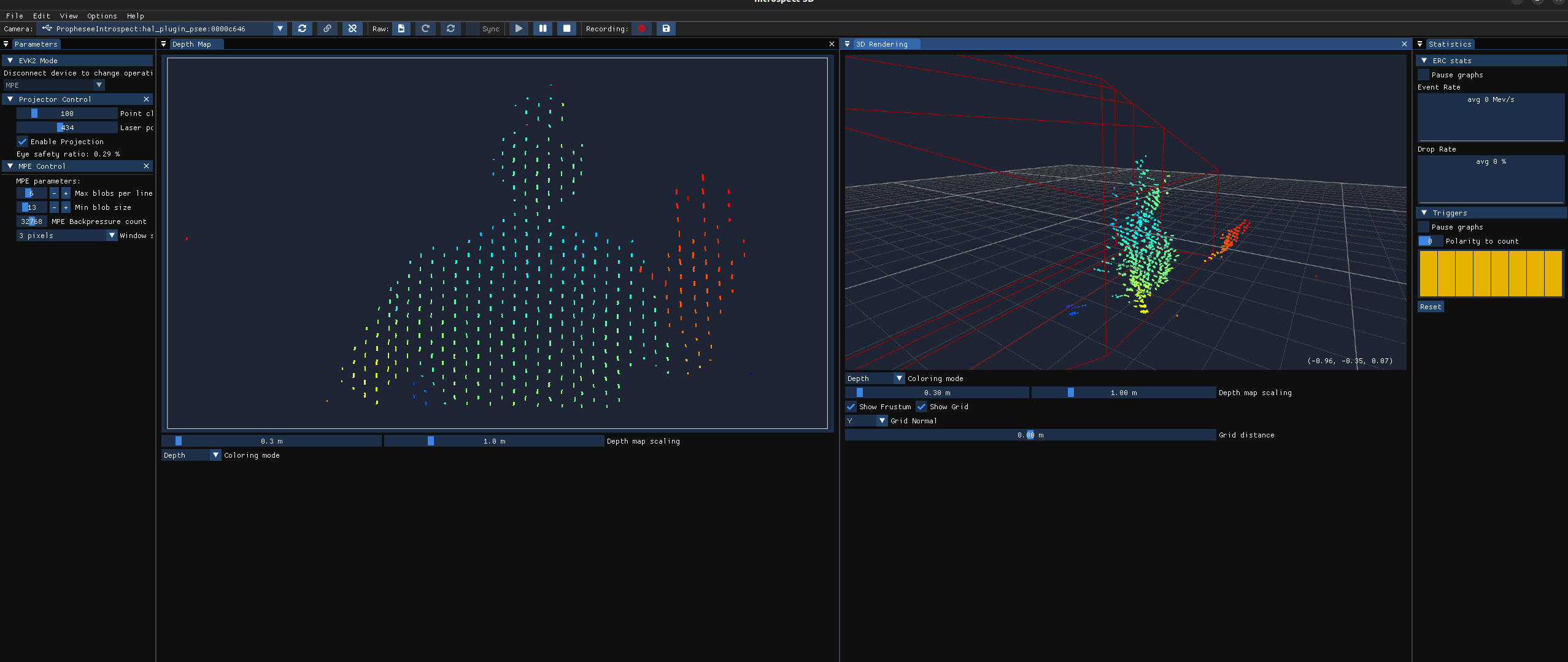

Stream point clouds from your EVK3D

Launch the EVK3D Explorer app and stream your first point clouds.

FAQ

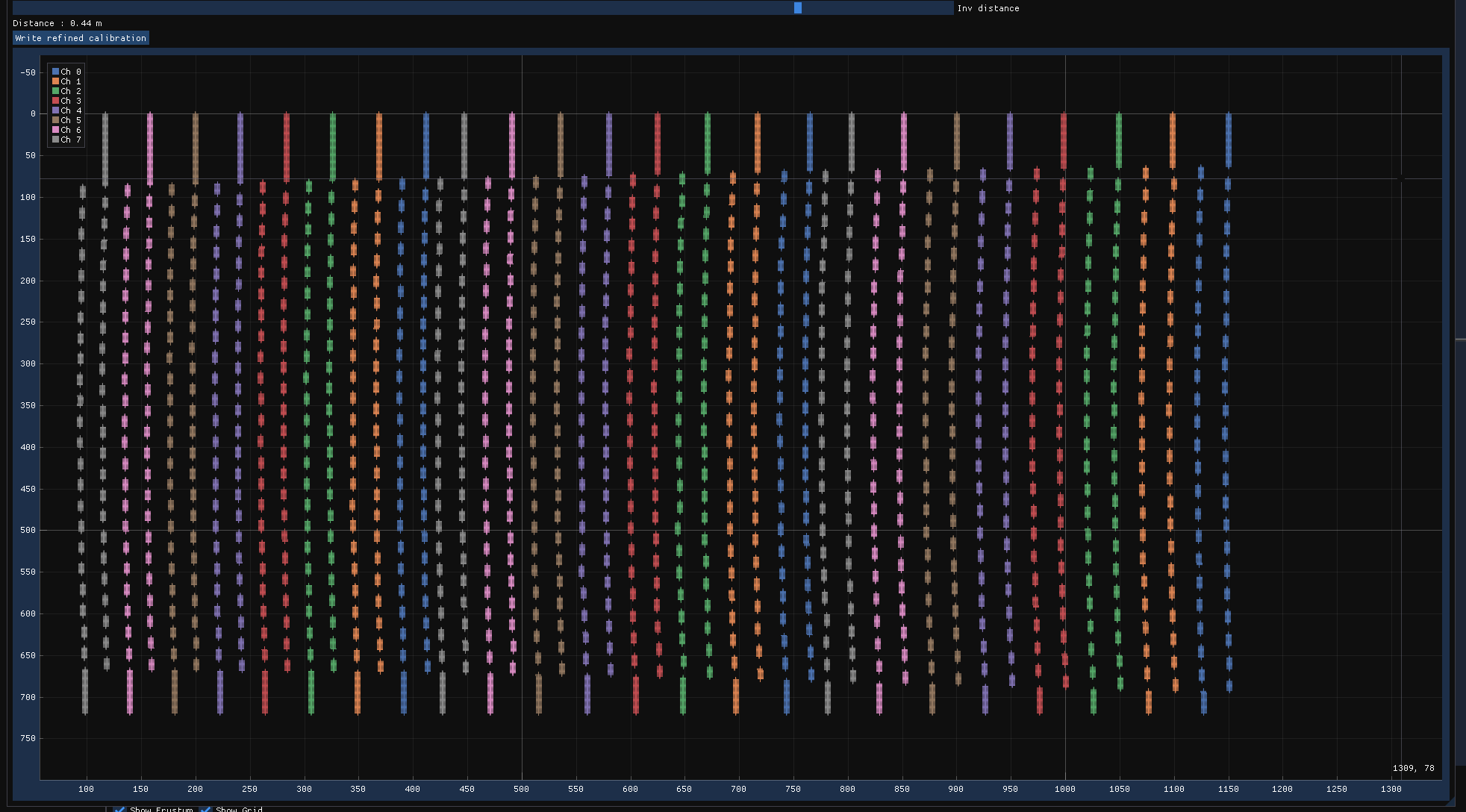

Why use an event-based sensor instead of a frame-based one for structured light?

The low latency of the sensor makes it possible to detect ultra-fast light modulation, which enables time-coded structured light. Unlike a pseudo-random spatial dot arrangement, time encoding removes ambiguity in the pattern. This allows lightweight algorithms to detect and extract light patterns. In addition, only the pixels that see the light pattern generate events and consume bandwidth and processing power.
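To make the idea of time encoding concrete, here is a minimal sketch in which each projected dot blinks with its own binary code, so a pixel can identify the dot it sees from event timing alone. This is an illustration only, not the scheme used in the EVK3D: the event format `(x, y, t_us)`, the function name `decode_time_codes`, the slot duration and the code length are all assumptions made for the example.

```python
from collections import defaultdict


def decode_time_codes(events, slot_us, n_bits):
    """Recover a binary ID per pixel from ON-event timestamps.

    events:  iterable of (x, y, t_us) ON events, with t_us measured from
             the start of the code word (hypothetical input format).
    slot_us: duration of one bit slot in microseconds (assumed).
    n_bits:  number of bit slots in one code word (assumed).
    """
    codes = defaultdict(int)
    for x, y, t_us in events:
        slot = int(t_us // slot_us)
        if 0 <= slot < n_bits:
            # Set the bit for this slot (first slot = most significant bit).
            codes[(x, y)] |= 1 << (n_bits - 1 - slot)
    return dict(codes)


# Pixel (10, 4) sees light in slots 0 and 2, pixel (11, 4) in slot 1.
events = [(10, 4, 5), (10, 4, 205), (11, 4, 105)]
print(decode_time_codes(events, slot_us=100, n_bits=3))
# → {(10, 4): 5, (11, 4): 2}
```

Because each pixel is decoded independently from its own timestamps, no spatial neighbourhood search is needed, which is one reason such decoding can stay lightweight.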

How does the EVK3D compare to a ToF (time-of-flight) system?

The EVK3D computes depth by triangulation rather than time of flight. As a result, the depth measurement error increases with the square of the distance and is inversely proportional to the baseline.
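The scaling of triangulation error can be sketched with the standard first-order approximation, where depth error is roughly z² · δd / (f · b). The focal length in pixels, the disparity error and the baseline values below are illustrative assumptions, not EVK3D specifications.

```python
def depth_error_m(distance_m, baseline_m, focal_px, disparity_error_px):
    """First-order depth error of a triangulation system, in metres.

    Uses the textbook approximation dz ~= z^2 * dd / (f * b).
    All parameter values passed in are assumptions for illustration.
    """
    return distance_m ** 2 * disparity_error_px / (focal_px * baseline_m)


# Hypothetical setup: 1000 px focal length, 0.1 px disparity error.
near = depth_error_m(1.0, baseline_m=0.10, focal_px=1000, disparity_error_px=0.1)
far = depth_error_m(2.0, baseline_m=0.10, focal_px=1000, disparity_error_px=0.1)
wide = depth_error_m(2.0, baseline_m=0.20, focal_px=1000, disparity_error_px=0.1)

print(f"error at 1 m: {near * 1000:.2f} mm")
print(f"error at 2 m: {far * 1000:.2f} mm (4x: distance doubled)")
print(f"error at 2 m, doubled baseline: {wide * 1000:.2f} mm (halved)")
```

Doubling the distance quadruples the error, while doubling the baseline halves it, which is why a longer baseline extends the usable range.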

What is the depth range of the device?

The range limits come from two different considerations:

Depth accuracy range: depending on the baseline chosen, the same relative depth accuracy is reached at different maximum distances. Our system reaches 1.5% RMSE at 0.65 m, 1.0 m and 2.0 m respectively for the three variants.

Laser detection range: the distance at which the sensor can detect the emitted light depends on the lighting conditions. In bright sunlight, the range is reduced.
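As a quick worked example, the 1.5% figure can be converted into an absolute error at each quoted distance. This assumes the RMSE is expressed relative to the measured distance; the helper name `absolute_rmse_mm` is hypothetical.

```python
def absolute_rmse_mm(distance_m, relative_rmse=0.015):
    """Convert a relative RMSE into an absolute error in millimetres.

    Assumes the relative RMSE is a fraction of the measured distance.
    """
    return distance_m * relative_rmse * 1000.0


# Maximum distances quoted for the three variants.
for max_distance_m in (0.65, 1.0, 2.0):
    error_mm = absolute_rmse_mm(max_distance_m)
    print(f"{max_distance_m:.2f} m -> about {error_mm:.2f} mm RMSE")
```

Under that assumption, the three variants correspond to roughly 10 mm, 15 mm and 30 mm of absolute error at their respective maximum distances.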

Why is there a minimum distance?

The minimum distance comes from the algorithm implemented in the FPGA pipeline. The disambiguation process works within a certain distance range; closer than this, some projected points are wrongly disambiguated and produce erroneous points.

Can I change the triangulation algorithm myself?

The algorithm running in the FPGA fabric is one implementation developed by Prophesee, but we are open to helping our customers develop their own version.

Why use VCSEL technology?

VCSEL technology is one of the available choices for a structured light illuminator. It enables small projector footprints and high-volume industrial production.

Can I stream events with an EVK3D?

In its current form, the EVK3D is meant for evaluating the generated point cloud data, but we are open to helping customers access lower levels of processing.

What is the laser class of the EVK3D?

The EVK3D is a Class 1 laser device. Its light emission is less than 2% of the AEL (Accessible Emission Limit) defined in the IEC 60825-1:2014 standard.